The following began as a response to @andrewstone’s review on Reddit, but it probably belongs here (and it’s also too long for me to post there).

Comparisons with the Group Tokenization Proposal (Previously OP_GROUP)

Hey @andrewstone, thanks for reviewing! Sorry I missed your review earlier.

Aside: is there some other public forum where OP_GROUP has been discussed in technical detail before? (I see a few Reddit threads, but they’re pretty thin on substance.) Also, is this Google Doc the primary spec for Group Tokenization right now?

Before getting into details, I just wanted to clarify: I believe hashed witnesses are the smallest possible tweak to enable tokens using only VM bytecode. My goal is to have something we can confidently deploy in 2022. I don’t intend for this to stop research and development on other large-scale token upgrades.

If anything, I’d like to let developers start building contract-based tokens, then after we’ve seen some usage, we’ll find ways to optimize the most important use cases using upgrades like Group Tokenization.

Some responses and questions:

> Group Tokenization increases a transaction’s size by 32 bytes per output. So pretty much the same in terms of size scalability. (And the latest revision includes a separate token quantity field, rather than using the BCH amount. This is a design decision that I assume CashTokens will also need.)

Hashed witnesses increase the size by 32 bytes per input rather than per output, and token transactions will typically only need one. Do you have any test vectors or more implementation-focused specs where I can dig into the expected size of token mint, redeem, and transfer transactions with Group Tokenization?
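The per-output versus per-input difference can be made concrete with some back-of-the-envelope arithmetic. This sketch uses only the 32-byte figures discussed above; the one-input, two-output transfer is a hypothetical shape, not a test vector.

```python
# Back-of-the-envelope size overhead. The 32-byte figures come from the discussion above;
# the transaction shape below is a hypothetical example, not a measured test vector.
GROUP_ID_BYTES = 32        # Group Tokenization: group ID carried by each grouped output
HASHED_WITNESS_BYTES = 32  # Hashed witnesses: one 32-byte hash per input that uses one


def group_overhead(grouped_outputs: int) -> int:
    """Extra bytes when every token output carries a group ID."""
    return GROUP_ID_BYTES * grouped_outputs


def hashed_witness_overhead(hashed_inputs: int) -> int:
    """Extra bytes when the given number of inputs use hashed witnesses."""
    return HASHED_WITNESS_BYTES * hashed_inputs


# Hypothetical transfer: one token input, two token outputs (payment + change).
print("Group Tokenization:", group_overhead(2), "bytes")           # 64 bytes
print("Hashed witnesses:  ", hashed_witness_overhead(1), "bytes")  # 32 bytes
```

This counts only the 32-byte fields. It ignores Group Tokenization’s separate quantity field and CashTokens’ larger unlocking bytecode, which is why real test vectors would still be needed for a fair comparison.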

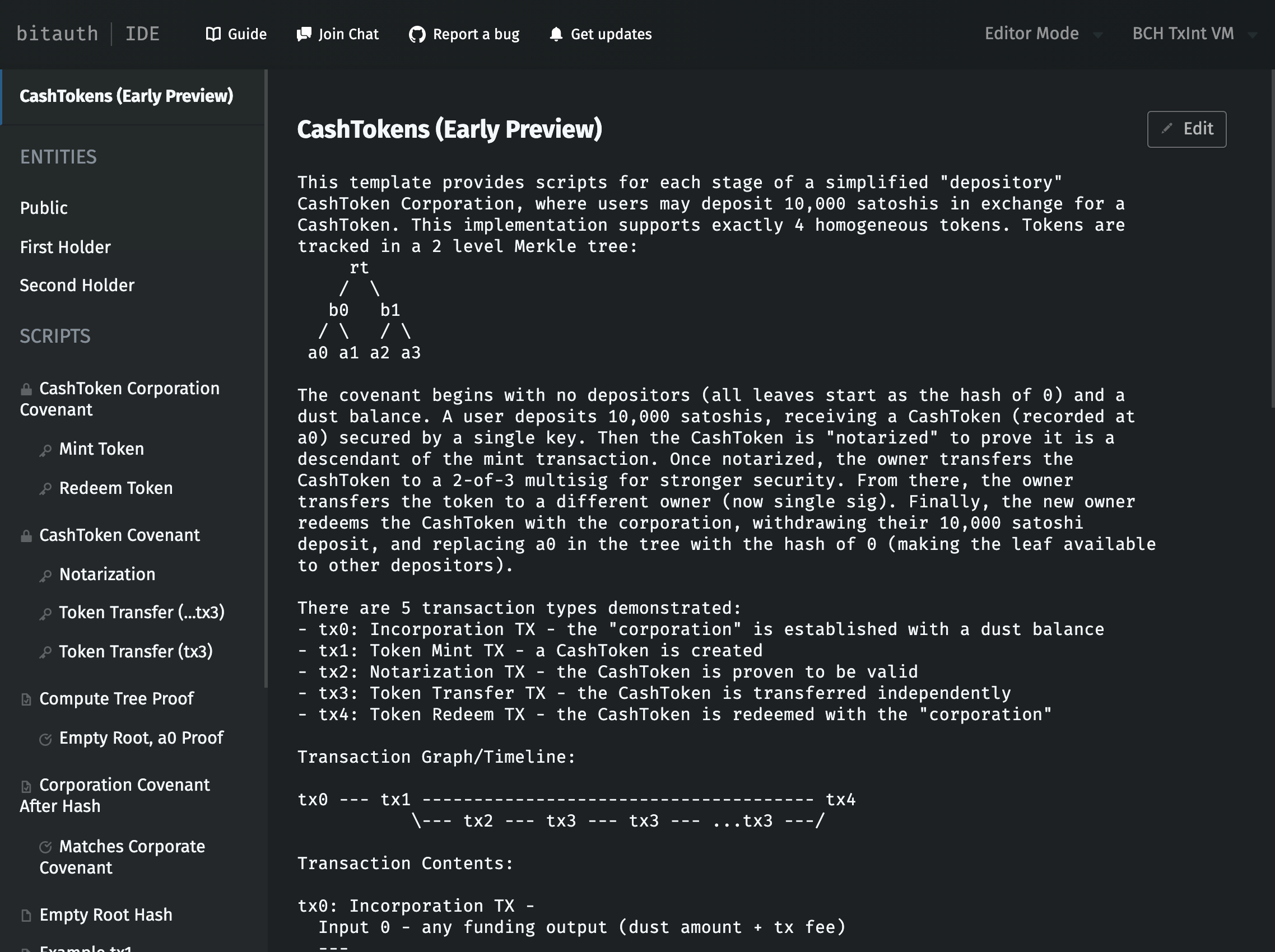

For CashTokens, a 32-level Merkle tree proof (>4 billion token holders) requires 192 bytes of redeem bytecode and 1024 bytes of proof. That overhead would apply only to mint and redeem transactions. For transfers, the inductive proof requires only the serialization of the parent and grandparent transactions, so at ~330 bytes each, the full unlocking bytecode is probably ~1,000 bytes. (If you’re curious, you can review the code in the CashTokens Demo.)
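The 1,024-byte figure follows from one 32-byte sibling hash per tree level (32 × 32). Below is a minimal Merkle proof sketch that assumes double SHA-256 (the digest `OP_HASH256` computes) and left/right ordering taken from the bits of the leaf index; the CashTokens demo contract may order or hash nodes differently, and the `holder-N` leaves are hypothetical.

```python
import hashlib


def hash256(data: bytes) -> bytes:
    """Double SHA-256, the same digest OP_HASH256 computes."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def build_tree(leaves: list[bytes]) -> list[list[bytes]]:
    """Return every level of the tree, leaves first (len(leaves) must be a power of 2)."""
    levels = [[hash256(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([hash256(prev[i] + prev[i + 1]) for i in range(0, len(prev), 2)])
    return levels


def make_proof(levels: list[list[bytes]], index: int) -> list[bytes]:
    """Collect one 32-byte sibling hash per level, from the leaf up to (not including) the root."""
    proof = []
    for level in levels[:-1]:
        proof.append(level[index ^ 1])
        index >>= 1
    return proof


def verify(leaf: bytes, index: int, proof: list[bytes], root: bytes) -> bool:
    """Recompute the root; bit i of `index` says whether the node is a right child at level i."""
    node = hash256(leaf)
    for sibling in proof:
        node = hash256(sibling + node) if index & 1 else hash256(node + sibling)
        index >>= 1
    return node == root


DEPTH = 32
print(2 ** DEPTH)  # 4294967296 possible leaves (> 4 billion)
print(DEPTH * 32)  # 1024 bytes of proof

# Small demo tree (depth 3, 8 hypothetical leaves) exercising the same logic.
leaves = [f"holder-{i}".encode() for i in range(8)]
levels = build_tree(leaves)
root = levels[-1][0]
proof = make_proof(levels, 5)
print(len(proof) * 32, verify(leaves[5], 5, proof, root))  # 96 True
```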

On quantity/amount: I didn’t propose a new quantity field since it’s pretty easy to use satoshis to represent different token quantities for CashTokens that need to support it. And it’s easy for covenants to prevent users from incorrectly modifying the value.
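Here is a minimal sketch of the kind of rule such a covenant might enforce, written in Python rather than VM bytecode for readability. The `Output` type, the placeholder locking bytecodes, and the rule “token quantity equals the satoshi value of covenant outputs” are illustrative assumptions; the sketch ignores dust limits, minting, and redemption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Output:
    """Hypothetical UTXO: for token outputs, the satoshi value doubles as the token quantity."""
    locking_bytecode: bytes
    satoshis: int


def token_quantity_preserved(inputs: list[Output], outputs: list[Output], covenant: bytes) -> bool:
    """Reject any transaction that changes the total satoshis held under the token covenant."""
    spent = sum(o.satoshis for o in inputs if o.locking_bytecode == covenant)
    created = sum(o.satoshis for o in outputs if o.locking_bytecode == covenant)
    return spent == created


COVENANT = b"token-covenant"  # placeholder for the covenant's locking bytecode
P2PKH = b"p2pkh-placeholder"  # placeholder for an ordinary BCH locking bytecode

inputs = [Output(COVENANT, 1_000), Output(P2PKH, 10_000)]  # token input + fee-paying BCH input
good = [Output(COVENANT, 600), Output(COVENANT, 400), Output(P2PKH, 9_500)]
bad = [Output(COVENANT, 600), Output(P2PKH, 10_300)]  # leaks 400 "tokens" into plain BCH

print(token_quantity_preserved(inputs, good, COVENANT))  # True
print(token_quantity_preserved(inputs, bad, COVENANT))   # False
```

Because fees come from ordinary BCH inputs, the token total can be required to balance exactly, so a wallet that mishandles the value simply produces a transaction the covenant rejects.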

> But on the CPU side, there’s a need to verify the inductive proof, so CashTokens would use more processing power.

Verifying the proof costs less than verifying a signature – two OP_HASH256s and some OP_EQUALs. There’s no fancy math: we just check the hash of the parent transactions, then check that they had the expected locking bytecode. (Check out the demo; it’s quite easy to review.)
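The same check can be mirrored outside the VM. This Python sketch uses a deliberately simplified, hypothetical transaction serialization (not the real BCH or PMv3 wire format) and assumes, roughly following the hashed-witness idea, that each input commits to the double SHA-256 of its unlocking bytecode rather than the bytecode itself. It shows only the inductive step (two hashes plus equality checks) and omits the genesis/mint case and everything else a real covenant verifies.

```python
import hashlib
from dataclasses import dataclass


def hash256(data: bytes) -> bytes:
    """Double SHA-256, the digest OP_HASH256 computes."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


@dataclass
class Tx:
    """Toy transaction (hypothetical format, not the real BCH or PMv3 encoding).

    inputs:  (previous txid, previous output index, unlocking bytecode)
    outputs: locking bytecode of each output
    """
    inputs: list[tuple[bytes, int, bytes]]
    outputs: list[bytes]

    def serialize(self) -> bytes:
        parts = [len(self.inputs).to_bytes(1, "little")]
        for prev_txid, prev_index, unlocking in self.inputs:
            # Hashed witness: commit to hash256(unlocking bytecode), not the bytecode itself.
            parts.append(prev_txid + prev_index.to_bytes(4, "little") + hash256(unlocking))
        parts.append(len(self.outputs).to_bytes(1, "little"))
        for locking in self.outputs:
            parts.append(len(locking).to_bytes(2, "little") + locking)
        return b"".join(parts)

    def txid(self) -> bytes:
        return hash256(self.serialize())


def verify_inductive_proof(spent_txid: bytes, parent: Tx, grandparent: Tx, covenant: bytes) -> bool:
    """Accept if `parent` is the transaction being spent and it spent a covenant output of `grandparent`."""
    if parent.txid() != spent_txid:        # first hash: supplied parent matches the outpoint
        return False
    grandparent_txid = grandparent.txid()  # second hash
    for prev_txid, prev_index, _ in parent.inputs:
        if (prev_txid == grandparent_txid
                and prev_index < len(grandparent.outputs)
                and grandparent.outputs[prev_index] == covenant):
            return True
    return False


COVENANT = b"token-covenant"  # placeholder for the token covenant's locking bytecode
grandparent = Tx(inputs=[(b"\x00" * 32, 0, b"mint")], outputs=[COVENANT])
parent = Tx(inputs=[(grandparent.txid(), 0, b"long-proof" * 100)], outputs=[COVENANT])
print(verify_inductive_proof(parent.txid(), parent, grandparent, COVENANT))  # True

# A forged parent spending a non-covenant output fails the check.
fake_gp = Tx(inputs=[(b"\x00" * 32, 0, b"")], outputs=[b"not-the-covenant"])
fake_parent = Tx(inputs=[(fake_gp.txid(), 0, b"")], outputs=[COVENANT])
print(verify_inductive_proof(fake_parent.txid(), fake_parent, fake_gp, COVENANT))  # False
```

Because each input in this toy format commits only to a 32-byte hash of its unlocking bytecode, `parent.serialize()` is the same size whether that bytecode is 4 bytes or 1,000, which is the property the hashed-witness approach relies on to keep each transfer’s proof from growing with the token’s history.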

> Both require a hard fork. Since CashTokens is creating a new transaction format, it is likely a larger change.

This seems… unlikely.

The Group Tokenization spec is ~24 pages (~43 pages with appendices and examples), and that doesn’t include a lot of implementation detail. The PMv3 proposal is just a few pages, and includes a full binary format breakdown/comparison and test vectors.

Also, doesn’t the latest Group Tokenization spec include a new transaction format? “We define a new transaction format that is used for transactions with groups (or with BCH).”

> The inductive proof logic is also extremely critical code, even though it seems to not be part of miner consensus. Any bug in it (in any client) would allow transactions to be committed to the blockchain that appear valid on that client (but no others). In the context of SLP, I call this problem a “silent fork” (clients have forked their UTXO set – that is, their tracking of who owns what coins – but the blockchain has not forked). In many ways a silent fork is worse than an accidental blockchain fork because the problem can remain latent and be exploited for an indeterminate amount of time. In contrast, a bug that causes an accidental blockchain fork is immediately obvious.

The inductive proof actually happens entirely in VM bytecode (“Script”) – if different implementations have bugs which cause the VM to work differently between full nodes, that is already an emergency, unrelated to CashTokens.

If wallets have bugs which cause them to parse/sign transactions incorrectly, their transactions will be rejected, since they won’t satisfy the covenant(s). (Or they’ll lose access to the tokens, just as buggy wallets currently lose access to money sent to wrong addresses.)

On the other hand, the “silent fork” you described is possible with Group Tokenization. Group Tokenization is a much larger protocol change, where lots of different clients will need to faithfully implement a variety of specific rules around group creation, group flags, group operations, group “ancillary information”, “group authority UTXOs”, authority “capabilities”, “subgroup” delegation, the new group transaction format, “script templates”, and “script template encumbered groups”. It’s plausible that someone will get something wrong.

Again, my intention is not to have the Group Tokenization proposal abandoned – a large-scale “rethink” might be exactly what we need. I just want a smaller, incremental change which lets developers get started on tokens without risking much technical debt (if, e.g., we made some bad choices in rushing out a version of Group Tokenization).

> One scalability difference is that in the Group Tokenization proposal, the extra 32 bytes are the group identifier. So they repeat in every group transaction, and in general there are a lot more transactions than groups. They will therefore be highly compressible on disk or over the network, just by substituting small numbers for the currently most popular groups (e.g. LZW compression).

> However, each inductive proof hash in CashTokens is unique and pseudo-random, and therefore not compressible.

Yes, and moreover, the largest part of CashToken transactions will be their actual unlocking bytecode, since the network doesn’t keep track of any state for them. That’s why they are so low-risk: Hashed Witnesses/CashTokens specifically avoid making validation more stateful.
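The compressibility point (group IDs repeat, per-transaction hashes do not) is easy to check on synthetic data. This sketch uses zlib (DEFLATE, an LZ77-family compressor, standing in for the LZW example) on 1,000 records drawn from three repeated 32-byte “group IDs” versus 1,000 unique pseudo-random 32-byte hashes. Real transactions contain far more than these fields, so this isolates the identifiers only.

```python
import hashlib
import zlib

RECORDS = 1_000

# Synthetic "group IDs": three popular groups, each a fixed 32-byte value, repeated across records.
group_ids = [hashlib.sha256(f"group-{n}".encode()).digest() for n in range(3)]
repeated = b"".join(group_ids[i % 3] for i in range(RECORDS))

# Synthetic "per-transaction hashes": every record is a distinct pseudo-random 32-byte value.
unique = b"".join(hashlib.sha256(f"tx-{i}".encode()).digest() for i in range(RECORDS))

for label, data in (("repeated group IDs", repeated), ("unique hashes", unique)):
    compressed = len(zlib.compress(data, 9))
    print(f"{label}: {len(data)} -> {compressed} bytes")
```

The repeated IDs shrink to a tiny fraction of their raw 32,000 bytes, while the unique hashes stay at roughly full size, matching the tradeoff described above.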

No doubt a solution which makes transaction validation more stateful could save some storage space, but even compared to current network activity, CashTokens would be among today’s smaller transactions. CashFusion transactions are regularly 10x-100x larger than CashToken transactions, and we’re not urgently concerned about their size. And CashToken fees will be as negligible as expected on BCH: users will pay fractions of a cent in 2020 USD per transaction.
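The “fractions of a cent” claim is simple arithmetic. This sketch assumes a fee rate of 1 satoshi per byte (the common BCH default minimum relay fee), an assumed total size of 1,200 bytes for a transfer (roughly the ~1,000-byte unlocking bytecode estimated earlier plus inputs and outputs), and an illustrative 2020-era price of $300 per BCH; all three are parameters you can change.

```python
SATS_PER_BCH = 100_000_000


def fee_usd(tx_bytes: int, sats_per_byte: float = 1.0, usd_per_bch: float = 300.0) -> float:
    """Fee in US dollars for a transaction of `tx_bytes` at the given fee rate and assumed price."""
    fee_sats = tx_bytes * sats_per_byte
    return fee_sats / SATS_PER_BCH * usd_per_bch


# 1,200 bytes is an assumed total size for a CashToken transfer.
print(f"${fee_usd(1_200):.4f}")  # $0.0036
```

Even doubling the size or the price keeps the fee well under one cent.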

> There’s some huge misunderstanding that Group Tokens impacts scalability. It doesn’t, except in the sense that the blockchain is now carrying transactions for both BCH and USDT (for example). No token protocol will be able to have on-chain tokens without having token transactions! I’ve come to believe that people who make this scalability argument are being disingenuous – they should just say they don’t want tokens – rather than concern trolling.

> Described that way – that BCH should be for BCH only – I can respect that argument, but I entirely disagree with it. And I think that the market agrees with me, based on the performance of BCH over the last few years.

To be fair, the original OP_GROUP proposal had a far larger impact on scaling, and people might not know there’s a new spec. Also, the latest spec reduces the amount of new state introduced into transaction validation, but it’s still not “new state”-free. There are real tradeoffs being made, even if you and I agree that tokens might be worth it.

A smaller, easily-reviewed change like PMv3 would give us a great opportunity to showcase the value of contract-integrated tokens on BCH. If we see a lot of on-chain applications, larger upgrade ideas like Group Tokenization will probably get more interest.

And with an active token-contract developer community, we’d have a lot more visibility into the token features BCH needs to support!