Alright, here I go with some opinions. I’ll qualify this immediately by saying I’m sometimes wrong, I am not a Bitcoin historian, and I don’t have as deep an appreciation of why things are the way they are as some others in the Bitcoin Cash community.

I’ll do your questions in reverse:

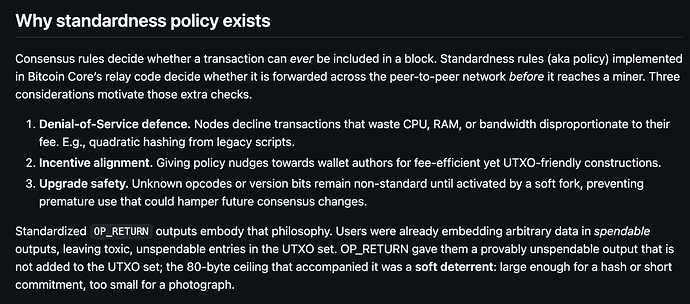

Why is there a separation between consensus rules & node relay policies?

IMO: the relay policies offer a protective layer with two functions:

- originally, to restrict the mainstream use cases to those transactions which are better understood in terms of performance impact on the network, i.e. safer to scale

- layered defense - policy changes can often be adopted quickly and independently by node operators to mitigate new threats. Changes within policy scope do not cause chain splits, whereas consensus is consensus - changing it is harder and requires wide coordination.

Is there a “good reason” for non-standard transactions?

I would see it in being able to push the envelope in terms of new use cases that can be allowed to develop while being challenged in the real economic environment - something which may not be revealed on testnets despite best intentions. Sort of an entry gate into later becoming accepted into standardness once they’ve proven themselves to be non-problematic. In practice I’m not sure we can say that there are good enough checks and balances in case some non-standard transactions turn out to cause problems.

But maybe someone else has a better reason than I do for non-standard. Of course the dichotomy arises directly from having the distinction between what policy permits and what consensus permits. Non-standard being just the term for that which is allowed by consensus but not by relay policy. So this boils down to “why allow such a bifurcation between relay policy and consensus in the first place”, which is again your first question, so … somewhat circular.

Should we also consider fixing non standard issues by changes straight into consensus? Do we need to, or have we already?

We can consider it, but I don’t think the need is very great if we still have to ask this question. I do hear some people argue in certain specific cases, such as the discrepancy between max standard tx size vs. max consensus tx size, that this poses difficulties for some protocols and an alignment would be good.

Alignment would generally reduce complexity in code bases, and I would consider that a large benefit but it has to be done very thoughtfully of course. One doesn’t want to haphazardly relax rules that might serve a protective function, without being quite certain that exploitation is impossible or costly enough to deter.

And any alignment of policy <-> consensus comes with the cost of changing many codebases that have baked these rules in either way.
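To make the standard vs. consensus size discrepancy a bit more concrete, here is a minimal Python sketch of how a single transaction size can land in three different buckets. The two limits are what I believe the current BCH values to be (100,000 bytes for standardness, 1,000,000 bytes for consensus), but treat them as assumptions and check your node implementation. The names `classify_by_size` and `TxStatus` are made up for illustration, and the sketch looks at size only, ignoring every other standardness or consensus rule.

```python
from enum import Enum

# Assumed limits for illustration only; verify against your node software.
MAX_STANDARD_TX_SIZE = 100_000     # bytes, relay policy (assumption)
MAX_CONSENSUS_TX_SIZE = 1_000_000  # bytes, consensus (assumption)


class TxStatus(Enum):
    STANDARD = "standard"          # relayed by default and valid in a block
    NON_STANDARD = "non-standard"  # valid in a block, not relayed by default
    INVALID = "invalid"            # rejected by consensus


def classify_by_size(tx_size_bytes: int) -> TxStatus:
    """Classify a transaction by its serialized size alone (hypothetical helper)."""
    if tx_size_bytes > MAX_CONSENSUS_TX_SIZE:
        return TxStatus.INVALID
    if tx_size_bytes > MAX_STANDARD_TX_SIZE:
        return TxStatus.NON_STANDARD
    return TxStatus.STANDARD


if __name__ == "__main__":
    for size in (250, 150_000, 2_000_000):
        print(size, classify_by_size(size).value)
```

Aligning policy with consensus would amount to making the two constants equal, so the middle bucket disappears for this particular rule. Every codebase that has either number baked in would then need to be touched.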

Is this something the BCH community should try to be ahead of the game on?

We should be ahead in all areas, so my answer is YES and I love the initiative to table this for discussion.

However, I think it’s less urgent, and a much broader and more long-winded topic, than looking at the VM limits (CHIP), which already has an analytical approach and is better defined in terms of the benefits. I don’t think we in BCH have a burning issue here, unlike Core and the BSV crowd. For now, our challenge is to not fuck things up rather than unfucking things, and I think by filtering changes through the CHIP process carefully enough, we might be able to keep it that way.