What if we now get hash(blockA) == hash(blockB)? Bitcoin's security relies on that being computationally infeasible. Finding a collision in a 256-bit hash is a 2^128 problem and should not happen, not even once.

The assumption is always: if hashes are the same then the contents are the same. If that were not the case then the whole system would be broken.
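To put the 2^128 figure in perspective, here is a rough sketch of the birthday bound. It assumes the hash behaves like an ideal 256-bit random function; the function name `collision_probability` is made up for this illustration.

```python
import math

def collision_probability(n_hashes: int, bits: int = 256) -> float:
    """Birthday-bound approximation: p ~ 1 - exp(-n(n-1) / 2^(bits+1))."""
    exponent = n_hashes * (n_hashes - 1) / 2 ** (bits + 1)
    # -expm1(-x) equals 1 - exp(-x) but stays accurate for very small x.
    return -math.expm1(-exponent)

# Even 10^18 hashed blocks leave the collision probability negligible.
print(collision_probability(10**18))
# Only around 2^128 hashes does a collision become likely.
print(collision_probability(2**128))
```

This is only the generic bound for an ideal hash, not a statement about any specific attack on SHA-256.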

Ah damn, they are comparing hashes and not difficulties.

Stupid me, please disregard my deleted comment.

I will update the previous post.

I tend to accept this as the right answer. George contributed the section; I checked the formulas; then I did some editing on the text. I wrote “z represents bandwidth (bytes per second)”. This does not make much sense, as we’re adding seconds and bytes²/second.

Can you follow the math when reading “z represents seconds per byte” instead?
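A quick unit check may help here. It assumes the term in question has the form z·s, where s is a message size in bytes; the symbol s is introduced only for this check, since the formula itself is not reproduced in this thread.

$$
\text{bandwidth reading:}\quad [z \cdot s] = \frac{\text{bytes}}{\text{second}} \cdot \text{bytes} = \frac{\text{bytes}^2}{\text{second}}
$$

$$
\text{seconds-per-byte reading:}\quad [z \cdot s] = \frac{\text{seconds}}{\text{byte}} \cdot \text{bytes} = \text{seconds}
$$

Only the second reading yields a quantity that can be added to a delay measured in seconds.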

Regarding the cloned summary problem:

You are correctly identifying a DoS problem that arises when Tailstorm (and Parallel Proof-of-Work in general) is implemented naively.

I’m aware of multiple solutions to this problem, and you’re on the right track with your solution attempts.

First. In my first version of parallel proof-of-work, the summary requires a signature of the miner who produced the smallest (as in lowest hash) vote (= weak block). With this setup, it’s easy to prevent summary cloning, as you know exactly who did the cloning. Just burn the rewards if you see two conflicting summaries from the same miner ending the same epoch.
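A minimal sketch of that detection rule, assuming the signature has already been verified elsewhere. The names `Summary` and `EquivocationDetector` are hypothetical and not taken from any implementation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Summary:
    miner: str   # identity that signed the summary (signature check omitted)
    epoch: int   # epoch that this summary ends
    digest: str  # hash of the summary contents

class EquivocationDetector:
    def __init__(self) -> None:
        self._seen = {}      # (miner, epoch) -> first digest observed
        self.burned = set()  # (miner, epoch) pairs whose rewards are burned

    def observe(self, s: Summary) -> bool:
        """Return True if s conflicts with an earlier summary by the same miner."""
        key = (s.miner, s.epoch)
        first = self._seen.setdefault(key, s.digest)
        if first != s.digest:
            self.burned.add(key)
            return True
        return False
```

Seeing the same summary twice is harmless; only a second, different digest for the same miner and epoch triggers the burn.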

Second. The implementation of Tailstorm (@Griffith's work) ensures that summaries follow deterministically from the referenced sub blocks. You cannot clone the summary because there is only one. This detail did not make it into the final submitted paper.
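To illustrate what "follows deterministically" can mean, here is a sketch in which the summary is a pure function of the set of referenced sub-block hashes. The encoding is an assumption made for this example and not the actual Tailstorm serialization.

```python
import hashlib

def summary_id(sub_block_hashes: list[bytes]) -> bytes:
    """Derive a summary identifier from the referenced sub-block hashes only."""
    # Canonical order: sorting removes any freedom in how the set is listed.
    ordered = sorted(set(sub_block_hashes))
    h = hashlib.sha256()
    for sb in ordered:
        # Sub-block hashes are fixed-length, so plain concatenation is unambiguous.
        h.update(sb)
    return h.digest()
```

Because there is no miner-chosen field (nonce, timestamp, signature) in the input, two nodes holding the same sub blocks necessarily compute the same summary.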

Third. You accept that the underlying broadcast network requires some sort of DoS protection anyway and reuse it to mitigate the problem you describe. This is why I decided not to describe Tailstorm's careful mitigation in the paper.

Fourth. You delay propagation of the summary until the next proof-of-work is solved. I.e. you reject propagating summaries which do not have any confirming weak blocks yet.
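The relay rule of this fourth option could look roughly like the sketch below. `SummaryRelayPolicy` and its method names are hypothetical; validation and peer handling are left out.

```python
class SummaryRelayPolicy:
    """Hold summaries back until a weak block confirms them."""

    def __init__(self) -> None:
        self._pending = {}  # summary id -> summary that is held back

    def on_summary(self, summary_id, summary) -> list:
        """Store the summary; nothing is relayed yet."""
        self._pending.setdefault(summary_id, summary)
        return []

    def on_weak_block(self, weak_block, parent_summary_id) -> list:
        """Return the messages to relay, releasing a held summary if confirmed."""
        held = self._pending.pop(parent_summary_id, None)
        if held is not None:
            return [held, weak_block]
        return [weak_block]
```

A cloned summary without proof-of-work on top never leaves the node that received it, so spamming clones costs the attacker at least one weak block each.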

Fifth. When you choose the fourth approach, you may as well merge the summary with the next weak block. In that scenario, the strong block / summary has a list of transactions and a proof-of-work. This is what I do in the latest protocol version.

Very well.

This problem is solved then.

Would be great if @Griffith could confirm the below:

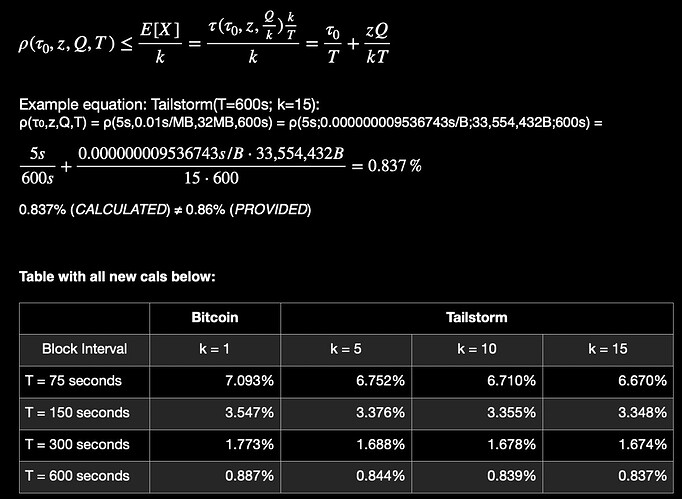

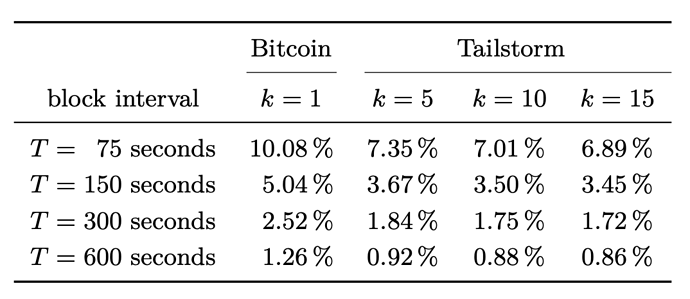

I did the calcs, and the numbers in the table don’t line up. They are all directionally correct, but do not come close to the stated results in the lower k values. See new summary table below (based on Formula 4):

I’m thinking I’m doing something wrong here as the numbers do not have nearly the variance shown in the original here:

Regarding your invalid UTXO spending spam problem, I’m not sure I can follow.

First off, the Tailstorm implementation uses k=3, but I think one should set a higher value. A 10-second sub-block interval seems about right. The first Parallel PoW paper has an optimization suggesting that k=51 maximizes consistency after 10 minutes, assuming strong block interval 10 seconds, a worst-case propagation delay of 2 seconds, and an attacker with 1/3 of the hash power. See Table 2 in the AFT '22 paper for other combinations of assumptions.

[ Your server is complaining I’m posting too many links ]

I’m losing you where you say that 15 weak blocks will be mined in parallel. Tailstorm is linear when possible and parallel when needed. Miners share their weak blocks as soon as possible. Miners extend the longest known chain of weak blocks. Mining 2 blocks in parallel requires that the second block has not yet been propagated. It’s typical to assume worst-case propagation delays of a couple of seconds. Now, 15 blocks in parallel require that

- all 15 are mined by different miners

- no miner learns about the other 14 blocks before mining his own weak block

15 blocks in 2 seconds, where the target interval of a single block is about 10 seconds, is very unlikely.
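As a back-of-the-envelope check, one can model weak-block discovery as a Poisson process and ask how likely 15 or more blocks are within a single propagation window. This is a simplification that ignores who mined which block; the function name `prob_at_least` is made up for the sketch.

```python
import math

def prob_at_least(n: int, window_s: float, interval_s: float) -> float:
    """P(at least n blocks in window_s) for a Poisson process with the given mean interval."""
    lam = window_s / interval_s  # expected number of blocks in the window
    # First tail term P(N = n), computed in log space to avoid overflow.
    term = math.exp(-lam + n * math.log(lam) - math.lgamma(n + 1))
    total = 0.0
    k = n
    # Sum the upper tail directly; computing 1 - CDF would round to 0.0 here.
    while total + term != total:
        total += term
        k += 1
        term *= lam / k
    return total

print(prob_at_least(15, window_s=2.0, interval_s=10.0))
```

With a 2-second window and a 10-second target interval, the result is on the order of 10^-23, which matches the intuition that this essentially never happens by chance.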

Oh, I understand your concern.

What I meant is a scenario right after a summary block has been confirmed, where no weak blocks have been mined yet.

So in this scenario, weak blocks can be temporarily mined in parallel when there is no fresh weak block (all previous weak blocks already belong to a summary), can’t they?

I do admit that this scenario seems more rare after your explanation.

I see that my response above was “flagged by the community” and thus greyed out. What is going on? Is this the “you are posting too many links” heuristic?

Uh, must be an automatic action because your account is too new and posting links maybe?

I wouldn’t worry about it.

I see it grayed out too… not sure why flagged/auto flagged. But I can still view upon pressing the text.

K=1, T=75 still gets to a very incorrect number, though?

I brought this up in a chat I am in with the other paper co-authors. Our best guess is that we were using a slightly different parameterisation in the code that generated the table and failed to update the paper.

Rechecking, we are getting the same results as your table. This should be ok though. It does not change the point being made.

Fair enough. Perhaps the propagation impedance was higher, or some other parameter differed, which would lead to greater differences. The math is quite clear, though, that regardless of the variables used (within bounds), Tailstorm is more effective.

@pkel @Griffith Is the paper here, “Parallel Proof-of-Work with DAG-Style Voting & Targeted Reward Discounting” (which I am calling “NextGen TS” for now), the latest generally agreed-upon system for BU? If this is the latest, I want to take a deep dive into it now that TailStorm is largely understood.

(cc @ShadowOfHarbringer)

Thanks for the reminder, I will review it next.

It seems that TailStorm is not what I want, since it changes the block reward. The block reward under TailStorm could be too random, due to reward slashing (called “discount” in the paper) and to miners gaming the algorithm for profit, and it cannot be foreseen just how much it will be gamed.

I will also publish a comparison between TailStorm and internal intermediate/sub blocks soon™.

I’m not sure this is gameable. Miners cannot earn more than the current block reward no matter what, though maybe there is some clever scheme to secure more blocks. That doesn’t seem very likely, though, particularly with the orphan rate improvement. Tailstorm is actually supposed to improve the withholding situation, and it seems NextGen TS will improve this further. I will read the paper to learn more.

It’s absolutely gameable (not sure how precisely yet, but it is) for a simple reason.

You get slashed (discounted) for every block that is not mined in series but in parallel.
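To make the size of the discount concrete, here is a sketch that assumes the payout of a summary scales with the depth of its sub-block tree divided by k. This is an assumed reading of the discount rule for illustration only; the exact formula is not quoted in this thread, and the function names are hypothetical.

```python
from typing import List, Optional

def tree_depth(parents: List[Optional[int]]) -> int:
    """parents[i] is the index of sub-block i's parent, or None if it
    builds directly on the previous summary. Returns the longest chain."""
    def depth(i: int) -> int:
        d = 1
        while parents[i] is not None:
            i = parents[i]
            d += 1
        return d
    return max(depth(i) for i in range(len(parents)))

def discounted_payout(parents: List[Optional[int]], full_reward: float) -> float:
    """Total payout for one summary under the ASSUMED rule reward * depth / k."""
    k = len(parents)
    return full_reward * tree_depth(parents) / k

# k = 3, fully serial: depth 3, no discount.
print(discounted_payout([None, 0, 1], 6.25))
# k = 3, two sub-blocks mined in parallel: depth 2, payout scaled by 2/3.
print(discounted_payout([None, None, 0], 6.25))
```

Under this assumed rule, the payout never exceeds the full reward, and every parallel sub-block that shortens the longest chain lowers issuance for that summary.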

Bitcoin (Cash) has the advantage of being a battle-tested algorithm that has been gamed for 14 years since inception, so we have real-life data to back it and we pretty much already know what can and will happen.

Such data does not exist for TailStorm; it will take several years to create it.

So it is pretty much absolutely certain that TailStorm would change the block reward and issuance. The question is how much - at this point impossible to predict.

The issue with this is that Bitcoin without its original issuance can be claimed to no longer be “Bitcoin”, and the issuance is a very significant psychological/social cornerstone.

Not the maximum per block – just the minimum. It is an incentive to improve selfish mining.

We changed the difficulty algorithm, we have 10-block finality – seems by the same logic those would make us not Bitcoin as well.

A reward scheme with the same fundamental principles seems to me like it would still be Bitcoin. And just because something was done originally does not mean it is the best way forward. (This does not mean I am saying we 100% should change our current reward scheme.) Bitcoin was meant to be improved (when it makes sense, of course).

Anyways – let’s both take a read of NextGen TS and see what that brings.

Significantly changing the issuance is not part of the original contract of “Bitcoin”.

So even for me, this is too much. It could turn out that issuance will change by, for example, 10% as a result of mining parallel blocks.

This is not really acceptable.

It may seem this way to you, but it may not seem this way to hundreds of millions of different people.

I am not saying it makes sense, I am saying this is how it is with humans.