Can you please restate the question? I could not follow.

Sure, bro:

In the above ^ I assume that fresh weak blocks mined after a summary has been created depend on (are hashed after/linked to) the latest confirmed summary.

I’m still not certain I understand the issuance speed issue you reference. The speed of issuance cannot be greater than it currently is; it can only be less than or equal to it. Do you foresee some case where that would not be accurate?

Yes, slower issuance can also be a problem, because it breaks the original “Bitcoin” contract. Everybody who invested in Bitcoin(Cash) years ago expects a certain standard to be kept.

If you break that standard, you also break that contract. At this point the people who signed up for that contract by using Bitcoin(Cash) can make a claim that they were cheated in a way.

It is also possible that, due to miner games, the issuance will speed up. How? We can never know until we test the new technology for 4-5 years to find out.

This in a sense has already happened. Look how miners currently play the system. We are already a significant number of blocks ahead of BTC. Not only that, but miners currently game the system in a sense. They’ll move a bunch of hash over to claim a bunch of reward then move the hash off and let everyone suffer from the higher difficulty as it adjusts back down.

This scheme would actually go a long way towards minimizing those short term fluctuations. Have you considered this?

EDIT: upon rechecking, it seems the block difference may have tapered somewhat over the past year or two. We are 2,300 blocks ahead of BTC (so roughly 16 days' worth at 144 blocks per day). But this is still not small. And yes, much of it came from the hash war/defense against BSV. Regardless, though, miners still game the system and we get unhappy users dealing with 1hr+ single confirmation times on a relatively frequent basis.

This was in response to the UTXO spending problem, right?

I then replied that

I do not understand what you mean. Is this a problem or just an observation? In Tailstorm, the summary follows deterministically from the set of weak blocks it confirms. As soon as a miner sees k weak blocks confirming the previous summary, he can (re)produce the summary and start mining on the first block confirming this summary. Other miners can and will do the same, so it may happen that two weak blocks are mined at roughly the same time (within a single propagation delay). The system allows these blocks to be merged into the same (upcoming) summary: both get their share of the reward and both contribute their transactions to the ledger. There is nothing special about fresh summaries without confirming subblocks, as you seem to be implying. The same can happen anywhere in the epoch, except at the very end: when two miners each produce a k-th weak block at roughly the same time (before learning of the other k-th block), there are now k+1 weak blocks confirming the last summary. Then one weak block will be orphaned.
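To make the "summary follows deterministically from the set of weak blocks" point concrete, here is a toy sketch. It is not Tailstorm's actual construction: the function name `summary_id`, the SHA-256 encoding, and the "sort the ids and keep the first k" tie-break are all assumptions made up for illustration. It only shows that miners holding the same set of weak blocks derive the same summary regardless of arrival order, and that a (k+1)-th block is left out.

```python
import hashlib
from typing import Iterable, Optional


def summary_id(prev_summary: str, weak_block_ids: Iterable[str], k: int) -> Optional[str]:
    """Toy model: derive a summary id from k weak blocks confirming prev_summary.

    Returns None while fewer than k distinct weak blocks are known.
    The tie-break (lowest ids first) is a hypothetical rule, not Tailstorm's.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    ids = sorted(set(weak_block_ids))  # arrival order must not matter
    if len(ids) < k:
        return None
    chosen = ids[:k]  # any extra (k+1)-th block is left out, i.e. orphaned
    h = hashlib.sha256()
    h.update(prev_summary.encode("utf-8"))
    for block_id in chosen:
        h.update(b"\x00")  # separator so concatenations are unambiguous
        h.update(block_id.encode("utf-8"))
    return h.hexdigest()
```

Two miners who saw the weak blocks in a different order would call `summary_id` with differently ordered lists and still get the same result, so both can start mining on the same new summary without extra coordination.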

This all depends on the probability/frequency that two blocks are mined at roughly the same time, i.e. while the first block has not yet propagated to the miner of the second block. That in turn depends on how you set the weak block size and interval. You can calculate the probability in theoretical models (above, Tailstorm paper, Storm paper), or you can look at the ETH PoW uncle rate for an evidence-based estimate. My conclusion is that the overhead in chain size is manageable.
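As a rough back-of-the-envelope version of that calculation: if block discovery is modelled as a Poisson process with mean interval T, the chance that at least one other block is found within a propagation delay d of a given block is 1 - exp(-d/T). The sketch below assumes exactly that simplified model (a single uniform delay, and no correction for the finder's own hashrate); the function names and the example values for k and the delay are mine, not taken from the papers.

```python
import math


def weak_block_interval(summary_interval_s: float, k: int) -> float:
    """Mean time between weak blocks if k of them make up one summary interval."""
    if k <= 0:
        raise ValueError("k must be positive")
    return summary_interval_s / k


def concurrent_block_probability(interval_s: float, delay_s: float) -> float:
    """P(at least one other block is found within delay_s), Poisson arrivals."""
    if interval_s <= 0 or delay_s < 0:
        raise ValueError("interval must be positive and delay non-negative")
    return 1.0 - math.exp(-delay_s / interval_s)


if __name__ == "__main__":
    delay_s = 5.0  # assumed propagation delay, purely illustrative
    for k in (1, 10, 60):  # illustrative values for weak blocks per summary
        interval = weak_block_interval(600.0, k)
        p = concurrent_block_probability(interval, delay_s)
        print(f"k={k:3d}  weak interval={interval:6.1f}s  P(concurrent)={p:.4f}")
```

The shorter the weak block interval relative to the propagation delay, the more often two weak blocks land in the same window, which is why the choice of weak block size and interval drives the overhead.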

Does this address your question?

Yes it does, thanks.

Well done.

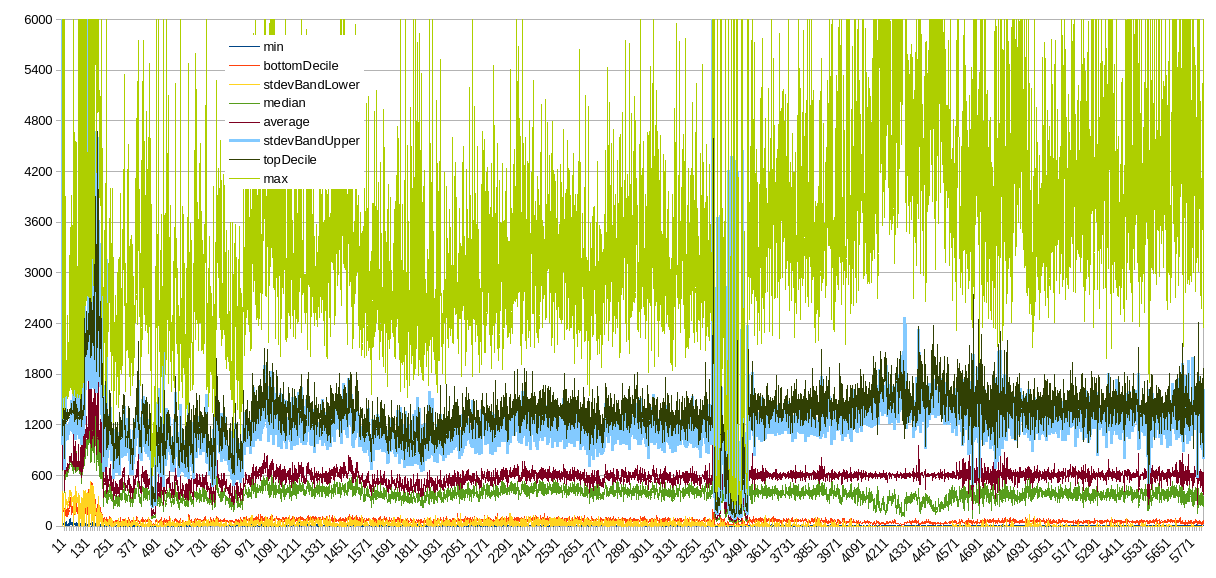

This was mostly due to the '17 fork's EDAA; see the chart (y: block time in seconds, x: block height / 144), where the anomaly is easy to spot.

Now, issuance is on schedule but we’ll likely be dragging the advantage forever.

Did this break the promise of Bitcoin?

Not saying it broke any promise – just stating that the system is currently gamed in response to Shadow’s suggestion that it could be gamed (and seeming supposition that it isn’t now). The current gaming could very likely be mitigated (at least to a decent extent) with a system like TailStorm or NextGen TS.

I am sorry, but you misunderstood my post.

I did not say it will be gamed. I said that it will be gamed much more. We already know how BCH’s PoW can be gamed, we pretty much know what to expect. The same cannot be said about TailStorm.

It’s clearly visible from the context of my multiple posts.

This does not mean I give up on the idea, no such thing is gonna happen. I do not give up easily.

I will either find a way to make this happen or I will design one myself.

It’s not impossible that I could even code it; what is slowing me down is my real-life troubles.

Gamed “much more.” Have you provided any method for them doing this that hasn’t been debunked? Sorry if I missed something. I don’t see (and just because I don’t see doesn’t mean it doesn’t exist) any scheme for it to be gamed further. The math/mechanism is not all that complicated.

By the same logic, we don’t know how much more BCH’s system can be gamed – there are other things that maybe haven’t been discovered or used yet, no?

Make what happen in particular? Weak blocks? Just asking for clarification.

No, again you skimmed some of my post.

I directly stated that it is impossible.

It is only possible with BCH’s current PoW since we have real-life evidence. It is impossible to procure such evidence for TailStorm without actually implementing TailStorm on a LIVE🔴 system.

So rationally speaking, such BETA testing is best done on another (preferably newly made) network rather than on a network that is supposed to be functional money with 100% uptime and no surprises.

This I don’t disagree with. Nexa will be implementing soon iirc. Will be a great testing ground. Soon afterwards would be cool to have a BCH testnet with it as well.

@ShadowOfHarbringer have you had a chance to read the NextGen TS paper yet? I’m reading it as we speak. Quite interesting. A bit more dense, so I will likely end up re-reading it a few times.

I will be doing the one called “Parallel Proof-of-Work with DAG-Style” next.

Can we get @pkel 's posts unflagged please?

He was probably auto-flagged because he posted too many links in the beginning.

late to this discussion, but I found this statement of the OP… quite confusing.

considering that 0-conf has NOT been adopted by a single BCH-supporting Exchange or BCH-supporting Online Merchant (that i’m aware of), exactly what are the 99% of users that are being served well by 0-conf today?

(i regularly have to wait upwards of 60+ minutes for a confirmation at BCH ATMs before I can be on my way)

OP, could you please clarify your assertion with links to just a few of the 99% of 0-conf use-cases currently out there in the wild? (some of us may not be aware of)

hmmm, so I read this as suggesting that “weak blocks” would ONLY be a “superficial” change to the Bitcoin Cash consensus.

OP, is that correct?

So you DO NOT actually expect Exchanges and Online Merchants to (all-of-a-sudden) start recognizing BCH now offers “Instant Finality” and begin dramatically improving the UX of its users (that’s already enjoyed by many other networks)??

(OP definitely no rush, i’ve been asking these questions for yrs now, so plz take your time)

cheers!

This is not the fault of the technology. This is the fault of the ATMs and exchanges themselves.

They refuse to acknowledge the working technology and instead judge BCH by BTC’s nonsense standards.

It’s politics.

Discussing politics is not the reason for this post, this is not the place for it, so I am not gonna do that here. We can do it on Telegram.

This is not correct.

I listed the psychological reason as one of the reasons in the list, which implies an AND/OR operator, not an XOR operator or picking just 1 from the list.

Psychological reasons are “added benefit”, they do not exclude other benefits.