Seems to me that if there’s going to be any limit, it needs to be made part of the formal protocol specification, and there needs to be some way of detecting that the limit has been hit when submitting transactions to the mempool. I suspect that problem is far more difficult than it sounds at face value, given the nature of decentralized systems.
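To make the detection problem concrete, here is a minimal sketch of the bookkeeping a client could do on its own side before broadcasting. Everything in it is an assumption for illustration: the `ChainTracker` class and its method names are hypothetical, the limit is passed in as a parameter rather than taken from any spec, and counting the new transaction itself toward the limit is a guess at how a node might apply it. It also only sees transactions this one client created, which is exactly why the decentralized case is harder.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class ChainTracker:
    """Hypothetical client-side tracker of unconfirmed transaction chains."""

    max_ancestors: int  # assumed node policy limit, NOT a protocol constant
    # unconfirmed txid -> the unconfirmed txids it spends from
    parents: Dict[str, List[str]] = field(default_factory=dict)

    def ancestor_count(self, parent_txids: Iterable[str]) -> int:
        """Count distinct unconfirmed ancestors of a tx spending parent_txids."""
        seen = set()
        stack = [p for p in parent_txids if p in self.parents]
        while stack:
            txid = stack.pop()
            if txid in seen:
                continue
            seen.add(txid)
            # Only follow parents that are still unconfirmed.
            stack.extend(p for p in self.parents[txid] if p in self.parents)
        return len(seen)

    def can_submit(self, parent_txids: Iterable[str]) -> bool:
        # Assumption: the limit counts the new transaction itself (+1).
        return self.ancestor_count(parent_txids) + 1 <= self.max_ancestors

    def record(self, txid: str, parent_txids: Iterable[str]) -> None:
        """Remember a transaction we broadcast and its unconfirmed parents."""
        self.parents[txid] = [p for p in parent_txids if p in self.parents]

    def confirm(self, txids: Iterable[str]) -> None:
        """Drop transactions that were included in a block."""
        for txid in txids:
            self.parents.pop(txid, None)
```

An app would call `can_submit` before building each chained transaction and queue the transaction until the next block if it returns `False`. It does nothing about chains extended by other parties spending the same outputs, and it ignores any descendant-side limit a node may also enforce.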

We’re presently building a demonstration app that consists of an auction tying FT-SLPs & BCH values to NFT-SLPs representing the items being bid on. Given that it can be 10-45 minutes between blocks, with no guarantee that all the transactions get through in the next block, hitting such a limit seems likely to be a common occurrence in a use case like this.

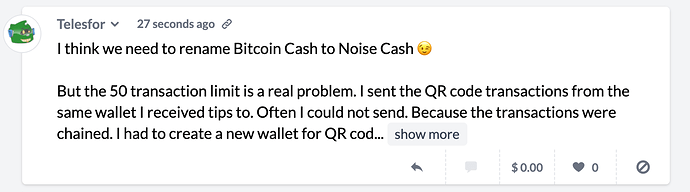

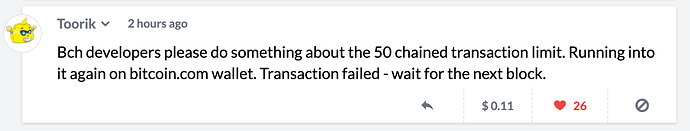

BCH claims to have fixed the BTC scalability issue. If mempool transaction chains are this shallow, then it’s fairly apparent that if BCH were ever tested by the kind of real transaction volume that would stress BTC, it might just fall on its face.

As a counter-point, given the cheapness of BCH transactions, is it possible that not having a limit creates some kind of potential DoS attack? Whatever the limit is, it should be so high that reaching it would take a significant investment of value and put a large memory load on the node, making it impractical to hit, before transactions start getting rejected or, worse yet, silently fail on the network.

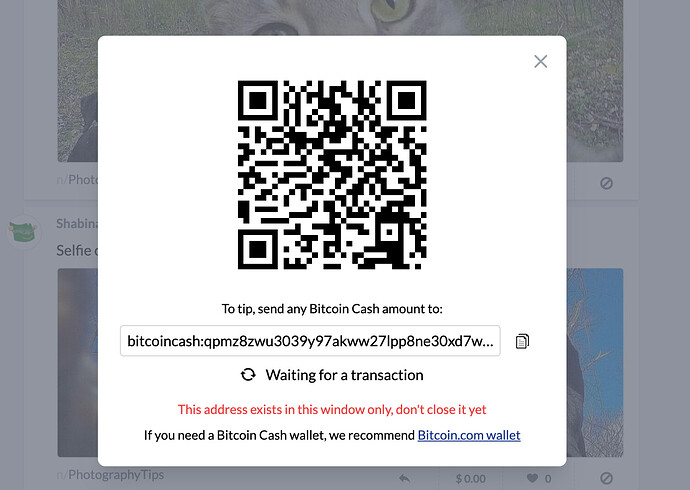

@emergent_reasons when you hit this limit, what was the behavior that you experienced? Was your attempt to post the transaction blocked, did transactions just fail to get included, or something else?