Making this space for RFC until someone creates a more concrete proposal.

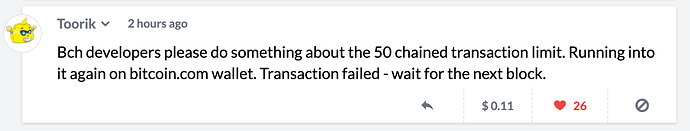

From read.cash:

Hi everybody. So, today we spent countless hours trying to fight the tipping problem on noise.cash. Basically, we already knew about 50-chained-tx limit, so we had a HD wallet set on server, with 10 wallets sending tips. Then we noticed too-long-mempool and growing queue of tips. WTF… how come? We have 1800 tips per hour and 10 wallets = 10 wallets * 50 = 500 tx/10 minutes. We split the coins to 50 outputs and that made it totally unspendable. WTF2… I thought that 50 limit applies to number of transactions, not number of outputs in parent transactions. Ok, JT Freeman explained it to me.
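The arithmetic in the post above can be checked with a short sketch. The function name and the `txs_per_tip` parameter are illustrative assumptions; the figures (1800 tips per hour, 10 wallets, a limit of 50) come from the post, and a later post in this thread mentions that each tip is actually two on-chain transactions.

```python
def backlog_after(minutes, tips_per_hour, wallets, chain_limit, txs_per_tip=1):
    """Transactions still queued if no block arrives for `minutes`.

    Assumes each wallet can have at most `chain_limit` unconfirmed
    transactions until the next block confirms them.
    """
    demand = tips_per_hour * txs_per_tip * minutes / 60
    capacity = wallets * chain_limit
    return max(0, demand - capacity)


# A normal 10-minute block interval: 300 tx demanded, 500 tx of headroom.
print(backlog_after(10, 1800, 10, 50))
# A 60-minute gap between non-empty blocks: the queue starts to grow.
print(backlog_after(60, 1800, 10, 50))
# Two on-chain transactions per tip, 10-minute interval.
print(backlog_after(10, 1800, 10, 50, txs_per_tip=2))
```

Under these assumptions the setup has headroom only while blocks arrive on time and each tip costs a single transaction.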

Then comes the HathorMM, so even after adding coins and splitting to 50 outputs, we had to wait for a block. HathorMM mines empty blocks. So, we’re sitting for hours, just looking at this non-stop: https://i.imgur.com/0xdvaPX.png https://i.imgur.com/tvU0jL9.png

What I want to say… noise.cash is a tiny project. And we’ve got stuck BADLY with 50-tx-limit. I’m developing for BCH for 2.5 years I think… And I haven’t foreseen these problems. What do we expect from NEW guys? What if a unicorn comes to BCH and creates a huge enterprise… that will get stuck with 50 tx/10 minutes… even worse - maybe with ONE TX with 50 outputs per 10 minutes… or 60 minutes if HathorMM/Eurosomething… continues to do their crap and miners continue to do nothing about it.

What I want to say, dear Freetrader, @im_uname , @emergentreasons [note from ER: this was originally posted in a BCHN group] PLEASE PLEASE make it a bit more high priority. If I could help with something, let me know…

Thank you!

Just to clarify, some people want to accuse us of faking transaction volumes, tips, etc… totally not the case. It’s what real users send to real users. But since we only have tips from Marc de Mesel implemented (noise.cash just exploded in popularity, we didn’t have time to implement client-side wallets), we’re the only ones sending tips to users (in database), but then users direct whom THEY want to tip - that’s the on-chain tx (actually two, since we give a part back to the tipper). People went crazy and started tipping $0.01 or about that. That’s why we suddenly have tens of thousands of transactions… and all these needs.

At peak we had 13,000 tips in our queue blocked by 50 chained-limit.

We now worked around the problem by implementing batching… but really, I never thought I’d see the day when I’ll HAVE to do batching in Bitcoin Cash, it just sucks.
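For readers unfamiliar with the term, batching here means paying many recipients from one transaction instead of one transaction per tip. The sketch below only shows the grouping step; it is not noise.cash's actual implementation, and `Tip`, `batch_tips`, and `max_outputs` are hypothetical names chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Tip:
    address: str
    satoshis: int


def batch_tips(queue, max_outputs=100):
    """Group queued tips into batches; each batch maps address -> total satoshis.

    One batch corresponds to one transaction with many outputs. Tips to the
    same address inside a batch are merged into a single output.
    """
    batches = []
    for start in range(0, len(queue), max_outputs):
        totals = defaultdict(int)
        for tip in queue[start:start + max_outputs]:
            totals[tip.address] += tip.satoshis
        batches.append(dict(totals))
    return batches


queue = [Tip(f"addr{i}", 1000) for i in range(250)]
print(len(batch_tips(queue)))  # number of transactions needed for 250 tips
```

The trade-off is latency: a tip waits in the queue until its batch is sent, which is what the post objects to.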

I just wanted to voice the opinion that 50 tx problem is not just something that doesn’t concern anyone. It’ll hurt those who need it the most - active growing businesses. And it’ll hit them unexpectedly. Like it did with us.

Thanks everyone!

Adding some further questions / comments from discussion on Telegram (telegram-bridge channel on BCHN Slack)

readdotcash asked:

Could somebody correct my understanding, please?

the limit is not like “50 unconfirmed transactions in a chain”, it’s more like “there are 50 outputs in unconfirmed transactions in your parent chain”

So, basically, 1-to-50 single transaction exhausts the limit immediately

How about 1-to-10, then one of those outputs sends to 40? does that exhaust the limit?

Are siblings involved? so 1-to-10, then two of these outputs send 1-to-20 - will I be able to spend these outputs or other 8 outputs?

and

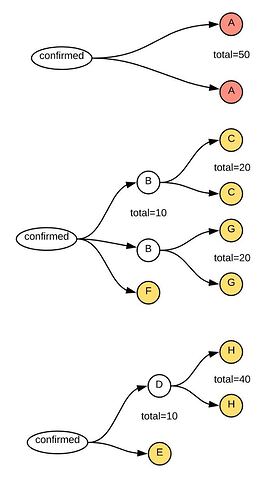

the question, in more graphical form:

to simplify the question - I assume the reds are unspendable, are any of yellow ones spendable? circle means “unconfirmed”

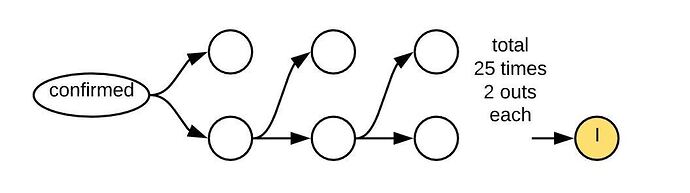

Here’s one more - the one we actually got stuck with yesterday

This does sound very bad, but we have to remember that this is not a very standard situation. It’s like the moons aligned.

- A project that was meant to start small got sudden massive influx of users.

- The users pay on-chain, but there are no user-specific wallets yet. The project didn’t get to this phase yet.

- You now have thousands of users for each custodial wallet. This is an extreme, as we can all agree.

If the project had not grown so fast, it would not have been an issue.

If the users had their own wallet with their own UTXOs, it would not have been an issue.

If the transactions had happened off-chain (it’s custodial anyway), it would not have happened.

I feel the pain of the guy, and I’m absolutely in favour of improving the limits there. In the meantime I suggest that he limits the influx of new users (a common method for in-beta services). He can also work on having many more UTXOs to spend in his hot-wallet, approximately based on what individual wallets would generate in UTXOs.

Again, I do agree that the need for increasing the limit exists.

The first thing to realize is that this is about the mempool limits and these are the defaults that most public nodes follow.

Exceeding these limits is not an issue at the blockchain level. It is only an issue for publicly available mempools.

A private mempool (BU has some neat code for this) that has these limits removed is by far the easiest way to sidestep the entire issue. A tx generator sends those to a private BU node configured without the limits, and AFAIR BU will re-broadcast those transactions when a block comes in and more become acceptable.

Armed with the basic knowledge that this is about a mempool, and its default settings, we can conclude a bit more.

- A single transaction spending only confirmed outputs, but having many outputs (many more than 50 is allowed), will not be an issue.

  The reason is that the test moment is on mempool accept, and the only thing that is counted is UTXOs already in the mempool.

  The first (A) reds make no sense, as you can only ever spend a UTXO once. A UTXO is not an address.

- The counting is about a direct chain from your new TX to outputs that are confirmed.

  You track the chain from your inputs to transactions, following the chain via their inputs. So I’m not sure what the graph B/F/G is about; it doesn’t make sense in this situation, since a transaction that is not directly in my chain of parents is not relevant to the decision-making process, to the best of my knowledge.
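The counting rule as described in this post can be sketched as a walk over a toy mempool. This is a model of the description only, not of any node's actual code: `unconfirmed_ancestors` and `would_accept` are hypothetical names, the new transaction is assumed to count toward the limit itself, and any other policy limits a node may apply are ignored.

```python
def unconfirmed_ancestors(new_tx_parents, parents, confirmed):
    """Collect every unconfirmed transaction reachable via inputs.

    `parents` maps a txid to the txids whose outputs it spends;
    `confirmed` is the set of txids already in a block.
    """
    seen = set()
    stack = list(new_tx_parents)
    while stack:
        tx = stack.pop()
        if tx in confirmed or tx in seen:
            continue
        seen.add(tx)
        stack.extend(parents.get(tx, ()))
    return seen


def would_accept(new_tx_parents, parents, confirmed, limit=50):
    # Assumption: the chain length includes the new transaction itself.
    chain = len(unconfirmed_ancestors(new_tx_parents, parents, confirmed)) + 1
    return chain <= limit


# A straight chain: tx0 spends a confirmed root, tx1 spends tx0, and so on.
confirmed = {"root"}
parents = {"tx0": ["root"]}
for i in range(1, 50):
    parents[f"tx{i}"] = [f"tx{i - 1}"]

print(would_accept(["tx48"], parents, confirmed))  # 49 ancestors plus itself
print(would_accept(["tx49"], parents, confirmed))  # 50 ancestors plus itself
```

In this model a sibling transaction never appears in the walk, which is the claim made above; whether real nodes behave that way is exactly what the thread is trying to establish.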

I interpreted the “A” circles in the graph as being a spend to some address, accompanied by a change address, all in the same transaction (referred to as “A”).

So I think we’re understanding his graphs differently.

Nevertheless, I think we agree that “A” should be spendable under the current policy limit of 50 in BCHN and other clients that use the same limit. Tests made have already confirmed this, so the real question is what caused the condition of “A” being blocked as unspendable, as observed by readcash.

Seems to me that if there’s going to be any limit then it needs to be made part of the formal protocol specification and then there needs to be some way of detecting that the limit has been hit when submitting transactions to the mempool - a problem I suspect is far more difficult than it sounds at face value given the nature of decentralized systems.

We’re presently building a demonstration app that consists of an auction tying FT-SLPs & BCH values to NFT-SLPs representing the items being bid on. Given that it can be 10-45 minutes between blocks and no guarantees that all the transactions get through in the next block, it seems that hitting such a limit would be a common occurrence given such a use case.

BCH claims to have fixed the BTC scalability issue. If mempool transaction chains are this shallow then it’s fairly apparent that were BCH to ever be tested by getting real transaction volumes that would stress BTC, BCH might just fall on its face.

As a counter-point, given the cheapness of BCH transactions, is it possible that not having a limit creates some kind of potential DOS attack? Whatever the limit is, it should be so high that hitting it would be a significant investment of value to meet and a large memory load on the node so as to be impractical before transactions start getting rejected or, worse yet, silently fail on the network.

@emergent_reasons when you hit this limit, what was the behavior that you experienced? Was your attempt to post the transaction blocked, did transactions just fail to get included, or something else?

The design of the mempool, specifically with regards to Child-pays-for-parent, was the reason for there being a cost.

The cost without CPFP is practically nil. And it doesn’t seem like people are actually using that feature right now: Researching CPFP with on-chain history

@tom sounds like you’re arguing for a (temporary?) workaround for the app running a private node which sounds fairly reasonable. I’m curious - not knowing all the details about how these mempools interoperate, would a node with a much larger mempool be able to automatically resolve these issues when gossiping to the other nodes which would start rejecting his transactions when the limit gets hit? After those txs get written to the ledger and confirmed, would they just start accepting the txs that were violating the limits earlier or would the private node have to be intelligent about this limitation and back-off/retry via extra coded efforts?

All implementations I know do not retry or back off.

When a transaction is first seen by a node, it will offer it to its peers, causing a broadcast effect. It will not volunteer knowledge of this transaction to its peers at any time later.

So if there’s not going to be a retry - how will the txs rejected by the public nodes earlier eventually make it into the confirmed blocks from the miners? Am I missing something obvious?

A wallet that offers a transaction to a full node (and thus effectively to a mempool) will get a rejection message if that is appropriate. And the wallet can use the rejection message (see image links from OP) to figure out what to do.
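One way a wallet could act on such a rejection is to hold the transaction back and offer it again after the next block. The sketch below assumes a hypothetical `send(tx)` callable that returns `None` on acceptance or a reject-reason string otherwise; the reason string used here is also an assumption and should be checked against what the node actually returns.

```python
from collections import deque

# Assumed reject reason for hitting the chained-transaction limit.
CHAIN_LIMIT_REASON = "too-long-mempool-chain"


class RetryingSubmitter:
    def __init__(self, send):
        self.send = send        # hypothetical: send(tx) -> None or reason string
        self.deferred = deque()

    def submit(self, tx):
        """Return True if accepted, False if deferred until the next block."""
        reason = self.send(tx)
        if reason is None:
            return True
        if reason == CHAIN_LIMIT_REASON:
            self.deferred.append(tx)
            return False
        raise ValueError(f"transaction rejected: {reason}")

    def on_new_block(self):
        """Re-offer everything that was deferred; still-rejected txs re-queue."""
        pending, self.deferred = self.deferred, deque()
        for tx in pending:
            self.submit(tx)
```

The retry lives in the wallet, not in the node, which matches the point above that nodes themselves do not re-announce transactions later.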

The question you ask is a larger one that has caused a lot of contention and I addressed it in a separate post: Thinking about TX propagation

Tom, you misunderstand it. These are tips that go from us to users. User-specific wallets won’t solve this, as this logic works as planned. User-specific wallets will ADD to this situation, not improve it.

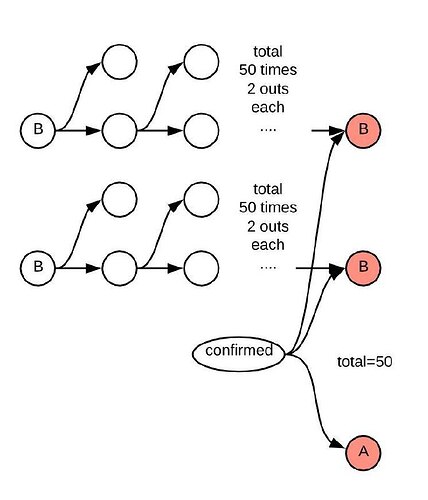

Again, it’s not “custodial” wallets. It’s wallets of Marc de Mesel sending tips to users own (non-custodial) addresses. If there was no 50-tx limit, I would have written, “it’s A WALLET of Marc de Mesel sending tips to users”, there’s really no reason for existence of 50 wallets of Marc, except for the 50tx limit.

Yes, the circles are outputs. So, if a line goes out of “confirmed” to a bunch of "A"s - that’s one transaction with “confirmed” as input and bunch of "A"s as outputs.

Actually, that mirrors my understanding.

That is incorrect. A user-specific wallet implies user-specific UTXOs, and thus independent chains that don’t have this issue because you just spread the 50 from a small number of chained-transactions to a very large number.

Yes, we have that. Users enter their own wallets. But in order to get money to their wallets, we need to send them from Marc’s wallet to their user-specific wallets. And getting money to their user-specific wallet is exactly the problem I’m pointing out here.

Basically, your solution is “just create gazillions of UTXOs for the future and spend them”. This is no better solution than our current 100 wallets. Basically, your suggestion is that we should create 30,000 (number of users) wallets instead of 100. I’m afraid to imagine how long “getBalance” for 30,000 wallets will take. Increasing by 1000 per DAY.

I’m not happy to have to be “that guy”, but this is really a unique problem on your side, while it certainly is made more difficult due to the limit (which I’m all in favour of removing, but it will take time!), it is solvable on your side.

You say that users enter their own wallet. Your explanation clearly shows how this is an approximation. You don’t have 30k wallets for 30k users. While a read.cash does in fact have nearly the same number of wallets as it has users.

A noise.cash that is feature-complete would have exactly that solution, yes. Users would sign the tips in their webbrowser-side wallet and spend their own money from their own wallet. You would not do any signing on your server and the problem would not exist.

I’m impressed you grew your userbase that fast, and the volume on-chain is equally impressive, we need to be creative to make that work with your current setup.

User-wallets are stateful for this exact reason. They just update once a block and remember all the UTXOs they have so you don’t have this scaling issue. Bitcoin Cash is decentralized, it’s not a bunch of central servers doing all the work, the user-wallets need to do some work to keep things scalable. That is just the nature of the beast.

I think the bottom line is that you got yourself into the business of handling a huge number of transactions and you need to have the infrastructure for that. Which includes a proper wallet solution (=stateful) and a full node. Using such a setup it’s really not too hard to have a stateful wallet that keeps the needed number of UTXOs on hand, splitting coins every block to make sure you have enough for the next block. That stateful wallet will be able to give you an instant answer in the ‘getBalance’ call since that is its purpose for being, it already knows your private balance.
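A minimal sketch of that idea, assuming UTXO ids are unique and that a separate component builds and broadcasts the actual split transactions. `StatefulWallet`, `target_utxos`, and the method names are hypothetical and do not refer to any existing wallet library.

```python
class StatefulWallet:
    """Keeps confirmed UTXOs and the balance in memory, updated once per block."""

    def __init__(self, target_utxos):
        self.target_utxos = target_utxos  # confirmed UTXOs wanted per block
        self.confirmed = {}               # utxo id -> satoshis
        self.balance = 0

    def on_block(self, newly_confirmed):
        """Record newly confirmed UTXOs; return how many more a split should create."""
        for utxo_id, sats in newly_confirmed.items():
            self.confirmed[utxo_id] = sats
            self.balance += sats
        return max(0, self.target_utxos - len(self.confirmed))

    def take(self):
        """Hand out one confirmed UTXO to fund a tip (raises KeyError if empty)."""
        utxo_id, sats = self.confirmed.popitem()
        self.balance -= sats
        return utxo_id, sats

    def get_balance(self):
        # Answered from local state; no network or index lookup needed.
        return self.balance
```

Because every tip starts from its own confirmed UTXO, no tip extends another tip's unconfirmed chain; the cost is keeping enough split outputs ready before each block.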

Tom, you misunderstand how it works. You assume that when user A sends a FreeTip to user B that user A has money. From that misunderstanding you conclude that “we” somehow took control of user A’s money. This is incorrect, user A has NO money to start with. I’ll draw a few diagrams to explain.

EDIT: I can’t reply because the forum software tells me that I’m not allowed to post multiple images… pinged Leonardo di Marco, waiting for the resolution.