Posted on Reddit too, just copying here:

Very impressive work, thank you for your time and energy on this /u/bitcoincashautist!

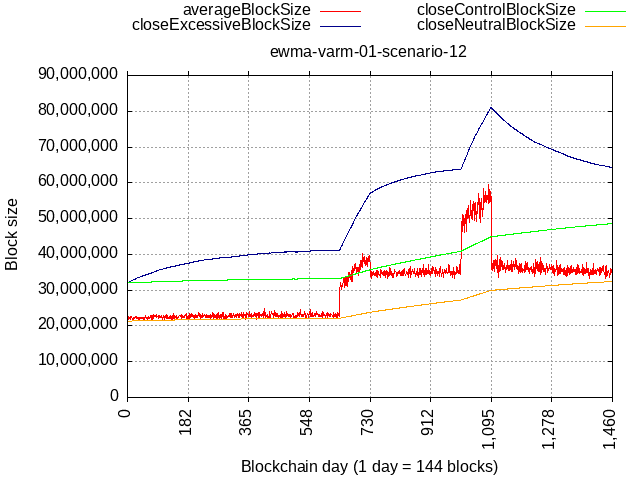

I think this is already a huge improvement over a fixed limit, and adopting a dynamic limit doesn’t preclude future CHIPs from occasionally bumping the minimum cap to 64MB, 128MB, 256MB, etc.

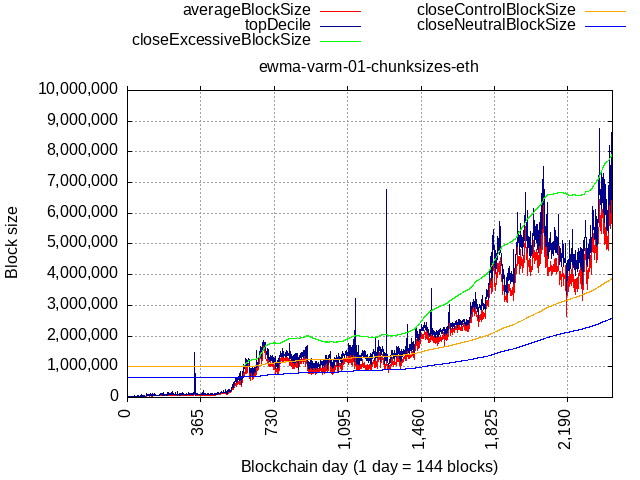

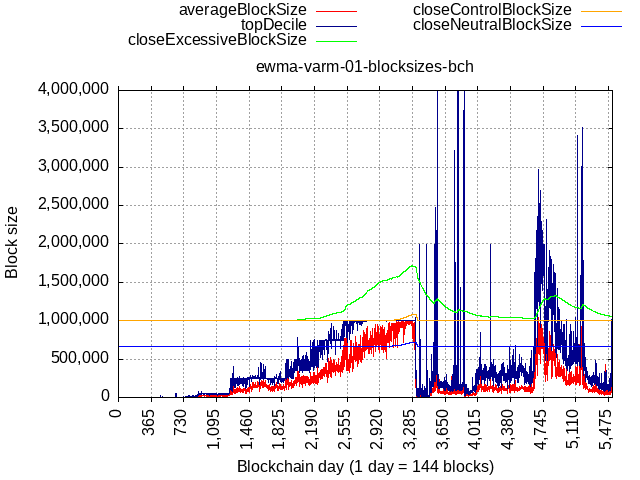

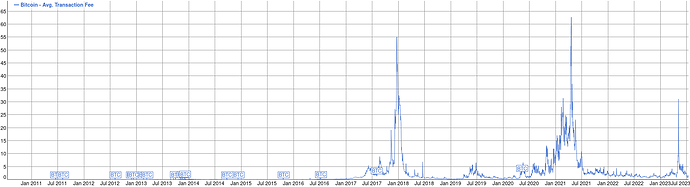

I’m most focused on development of applications and services (primarily Chaingraph, Libauth, and Bitauth IDE) where raising the block size limit imposes serious costs in development time, operating expenses, and product capability. Even if hardware and software improvements technically enable higher limits, raising limits too far in advance of real usage forces entrepreneurs to wastefully redirect investment away from core products and user-facing development. This is my primary concern in evaluating any block size increase, and the proposed algorithm correctly measures and minimizes that potential waste.

As has been mentioned elsewhere in this thread, “potential capacity” (of reasonably-accessible hardware/software) is another metric which should inform block size. While excessive unused capacity imposes costs on entrepreneurs, insufficient unused capacity risks driving usage to alternative networks. (Not as significantly as insufficient total capacity did prior to the BTC/BCH split, but the availability of unused capacity improves reliability and may give organizations greater confidence in successfully launching products/services.)

Potential capacity cannot be measured from on-chain data, and it can’t even be definitively forecast: any estimate must aggregate knowledge about the activity levels of alternative networks (both centralized and decentralized), future development in hardware/software/connectivity, the continued predictiveness of observations like Moore’s Law and Nielsen’s Law, and availability of capital (a global recession may limit widespread access to the newest technology, straining censorship resistance). We could make educated guesses about potential capacity and encode them in a time-based upgrade schedule, but no such schedule can be definitively correct. I expect Bitcoin Cash’s current strategy of manual forecasting, consensus-building, and one-off increases may be “as good as it gets” on this topic (and in the future could be assisted by prediction markets).

Fortunately, capacity usage is a reasonable proxy for potential capacity if the network is organically growing, so with a capacity usage-based algorithm, it’s possible we won’t even need any future one-off increases.
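To make the "usage as a proxy" idea concrete, here is a toy sketch of a usage-based limit with a minimum cap. It is not the CHIP's actual algorithm: the update rule, the function names, and the constants (`target_fill`, `max_step`) are all assumptions made up for illustration. It only shows the general shape: the limit drifts up while blocks are fuller than some target, drifts back down otherwise, and never falls below a floor that a future CHIP could still raise.

```python
def next_limit(current_limit, block_size, floor, target_fill=0.5, max_step=0.001):
    """Return the limit for the next block (hypothetical rule, illustration only)."""
    # Fraction of the current limit this block actually used, capped at 1.
    fill = min(block_size, current_limit) / current_limit
    # Signed pressure in [-1, 1]: positive above the target fill, negative below.
    if fill >= target_fill:
        pressure = (fill - target_fill) / (1 - target_fill)
    else:
        pressure = (fill - target_fill) / target_fill
    # Move by at most max_step per block, and never drop below the floor.
    return max(floor, current_limit * (1 + max_step * pressure))


def simulate(fills, floor):
    """Start at the floor and apply one update per block.

    `fills` lists each block's size as a fraction of the limit in force.
    """
    limit = floor
    for fill in fills:
        limit = next_limit(limit, fill * limit, floor)
    return limit


if __name__ == "__main__":
    floor = 32_000_000  # 32MB minimum cap, in bytes
    print(simulate([0.0] * 100, floor))   # empty blocks: limit stays at the floor
    print(simulate([1.0] * 1000, floor))  # sustained full blocks: limit grows
```

With these made-up constants, sustained full blocks move the limit up without anyone intervening, idle periods cost nothing beyond the floor, and a one-off increase is just a change to `floor`.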

Given the choice, I prefer that systems be designed to be “default alive” rather than require future effort to keep them online. This algorithm could reasonably get us to universal adoption without further intervention while avoiding excessive waste in provisioning unused capacity. I’ll have to review the constants more deeply once it’s been implemented in some nodes and I’ve had the chance to implement it in my own software, but I’ll say I’m excited about this CHIP and look forward to seeing development continue!