Hey Griffith, nice to see you again!

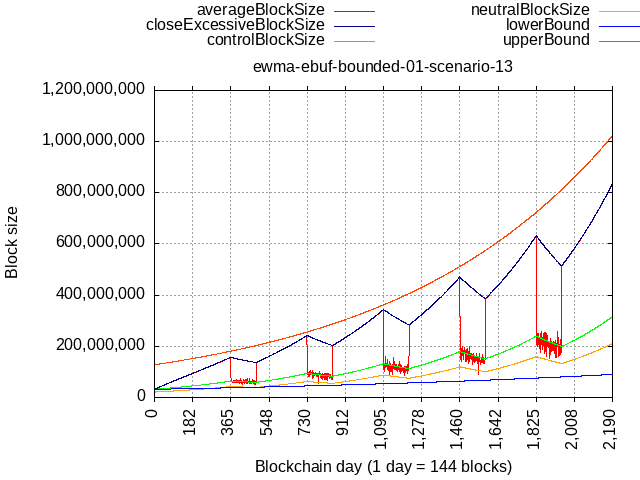

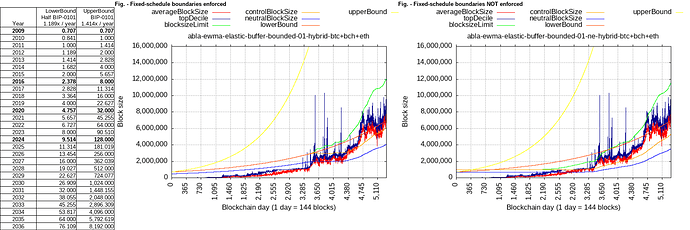

Wdym, where did you see that in that commit? The current proposal doesn’t have the 2 fixed-schedule “guardrail” curves; it only has the flat 32 MB floor. The algorithm itself has a natural boundary: it reaches its max. rate (2x/yr) only when blocks are 100% full 100% of the time. That would be faster than the BIP101 curve, but consider what it would take for the algorithm to intercept it:

Cycle:

- 8 months 100% full, 100% of the time (max. rate)

- 4 months 100% full, 33% of the time (falling down)

The gap widens with time because during dips in activity, the fixed-schedule curve races on anyway and increases the distance.
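
Whether a cycle like this gains on BIP101 is simple arithmetic on growth rates. Below is a minimal Python sketch that assumes only the two rates already discussed here (the algorithm’s max. rate of 2x/yr, and BIP101 doubling every 2 years, i.e. ~1.414x/yr). It does not model how fast the algorithm’s limit actually falls at 33% utilization; the function name `required_retention` is hypothetical. It computes how much the limit can afford to shrink during the down-phase of a 12-month cycle before the gap to a BIP101-rate curve starts widening.

```python
# Annual growth multipliers of the block size limit.
ALGO_MAX_RATE = 2.0         # algorithm's max. rate: 2x/yr at 100% full, 100% of the time
BIP101_RATE = 2.0 ** 0.5    # BIP101 doubles every 2 years, i.e. ~1.414x/yr


def required_retention(up_months: float) -> float:
    """Fraction of its value the limit must keep through the down-phase of a
    12-month cycle (up_months at max. rate, the rest below it) so that the
    whole cycle still matches BIP101's yearly growth.

    Below 1.0: the limit may shrink by (1 - result) during the down-phase.
    Above 1.0: it would have to keep growing even during the down-phase.
    """
    if not 0 <= up_months <= 12:
        raise ValueError("up_months must be between 0 and 12")
    gained = ALGO_MAX_RATE ** (up_months / 12)  # growth banked during the up-phase
    return BIP101_RATE / gained


for months in (6, 8, 9):
    print(f"{months} months at max. rate -> may shrink by at most "
          f"{1 - required_retention(months):.1%} in the remaining {12 - months}")
```

With 8 months at max. rate, the limit can lose at most about 10.9% during the remaining 4 months; with 9 months, about 15.9% during the remaining 3; with only 6 months it can’t afford to lose anything. Whether the real algorithm stays within those margins depends on its decay behaviour, which this sketch doesn’t model.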

If we shorten the dip to 3 months, i.e. a cycle of:

- 9 months 100% full, 100% of the time (max. rate)

- 3 months 100% full, 33% of the time (falling down)

then sure, it could catch up. But is it even possible in practice to sustain 100/100? All it takes is for a minority of hash-rate to defect, or for user activity to dip for whatever reason.
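
How long “catching up” takes can be sketched the same way. This is a simplification under stated assumptions: both the algorithm’s limit and the fixed-schedule curve are treated as growing at constant annual multipliers, whereas the real algorithm’s rate depends on utilization. The function `years_to_catch_up` and its inputs are hypothetical and only illustrate the arithmetic.

```python
import math

BIP101_RATE = 2.0 ** 0.5  # BIP101: doubles every 2 years (~1.414x/yr)


def years_to_catch_up(gap: float, algo_rate: float,
                      curve_rate: float = BIP101_RATE) -> float:
    """Years until a limit growing at algo_rate (annual multiplier) reaches a
    fixed-schedule curve that is currently `gap` times higher and keeps growing
    at curve_rate. Returns math.inf if it never catches up."""
    if gap <= 1.0:
        return 0.0        # already at or above the curve
    if algo_rate <= curve_rate:
        return math.inf   # the curve races on at least as fast
    # Solve gap * curve_rate**t == algo_rate**t for t.
    return math.log(gap) / math.log(algo_rate / curve_rate)


print(years_to_catch_up(2.0, 2.0))  # sustained 100/100 vs. a curve 2x ahead
print(years_to_catch_up(4.0, 2.0))  # sustained 100/100 vs. a curve 4x ahead
print(years_to_catch_up(2.0, 1.3))  # net rate below BIP101's: never
```

Even at the max. rate of 2x/yr sustained without a single dip, closing a 2x gap to a BIP101-rate curve takes 2 years and a 4x gap takes 4 years; any net rate at or below ~1.414x/yr never closes it.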

It would also take an infinite supply of transactions to maintain a 100/100 load; where would they come from at those ever-increasing rates?

If it’s “only” 90% full 90% of the time, then such a load would have to be sustained for more than 4 years to catch up:

I hope this demonstrates why it’s “safe” as it is, with a max. rate of 2x/yr.

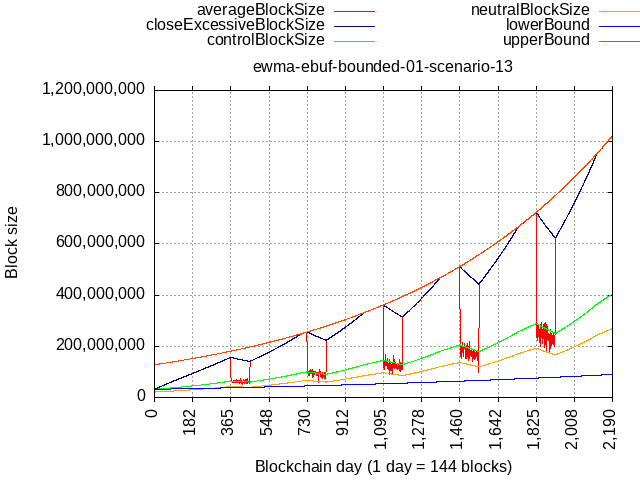

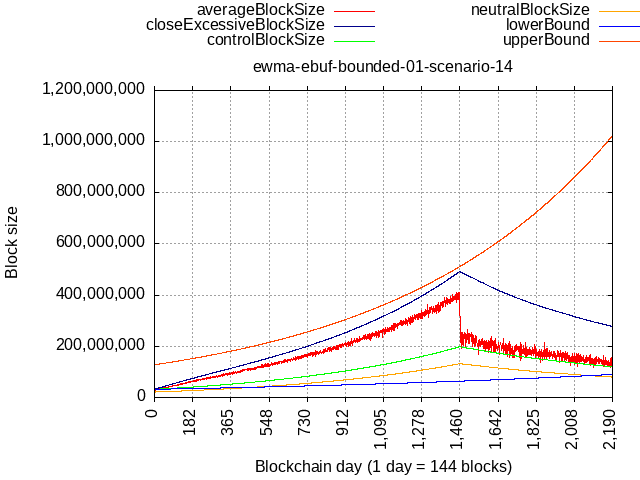

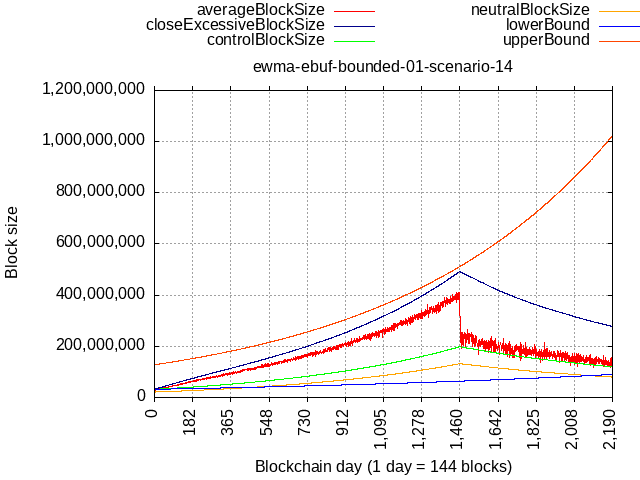

Anyway, I pushed the change I used to generate these plots to a new branch; it shows how the implementation would look with both guardrails added.

I consider it useless because, in practice, after a few years the upper guardrail curve would likely race so far ahead that the algorithm could never hit it, even at its faster rates, so what purpose would it serve?

I will just repeat J. Toomim’s comment from Reddit:

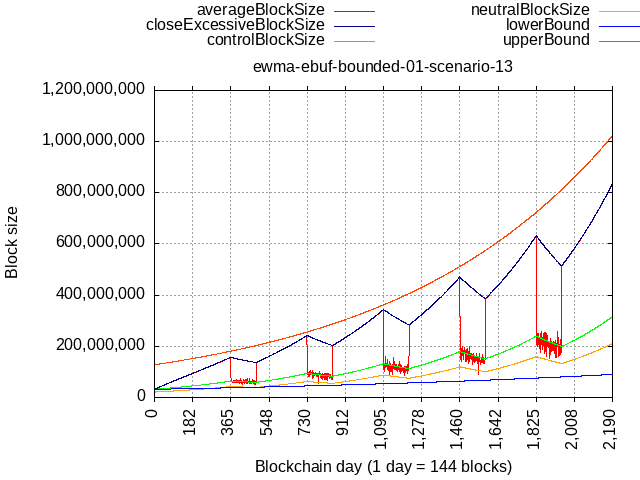

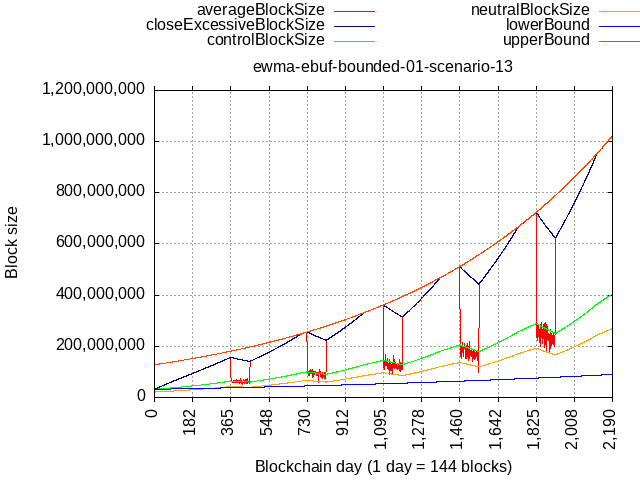

> Should blocks end up being consistently full, this algorithm could result in exponential growth that’s up to 2x faster than BIP101. I think that’s kinda fast, and it makes me a bit uncomfortable, but if we all hustled to beef up the network and optimize the code, we could probably sustain that growth rate for a few years, and would probably have enough time to override the algorithm and slow it down if needed, so it’s not atrocious.
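
“Up to 2x faster” is easiest to see in doublings per year: BIP101 doubles the limit every 2 years (0.5 doublings/yr), while the algorithm’s max. rate of 2x/yr is 1 doubling/yr. The sketch below assumes uninterrupted growth at a constant rate, which the algorithm only achieves when blocks are 100% full 100% of the time; `years_to_reach` is a hypothetical helper and sizes are in MB.

```python
import math

BIP101_DOUBLINGS_PER_YEAR = 0.5    # BIP101: 2x every 2 years
ALGO_MAX_DOUBLINGS_PER_YEAR = 1.0  # algorithm's max. rate: 2x/yr


def years_to_reach(start_mb: float, target_mb: float,
                   doublings_per_year: float) -> float:
    """Years of uninterrupted exponential growth from start_mb to target_mb."""
    return math.log2(target_mb / start_mb) / doublings_per_year


# BIP101's own schedule: 8 MB (2016) -> 8192 MB is 10 doublings, i.e. 20 years (2036).
print(years_to_reach(8, 8192, BIP101_DOUBLINGS_PER_YEAR))
# From the 32 MB floor, 8192 MB is 8 doublings: 16 years at BIP101's rate,
# or 8 years if blocks stayed full enough to sustain the max. rate throughout.
print(years_to_reach(32, 8192, BIP101_DOUBLINGS_PER_YEAR))
print(years_to_reach(32, 8192, ALGO_MAX_DOUBLINGS_PER_YEAR))
```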

What he was proposing is to replace the flat 32 MB floor value with a slow-moving fixed-schedule curve:

> So my criticism is that in the absence of demand, this algorithm is way too slow (i.e. zero growth)

Here is his suggestion, and his explanation of why he considers a 2x/yr max. rate probably OK (and I hope my plots above demonstrate that it will be):

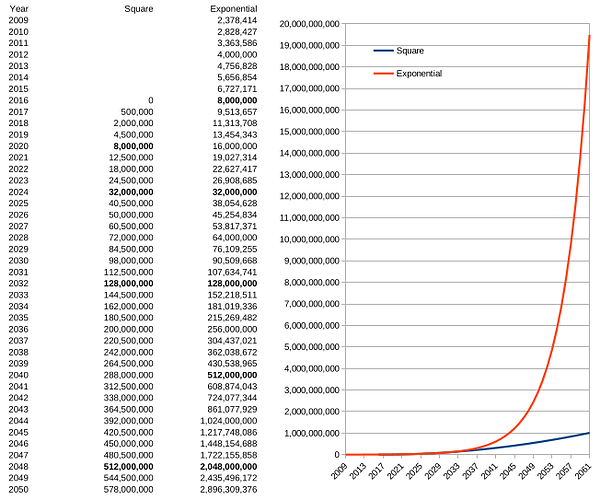

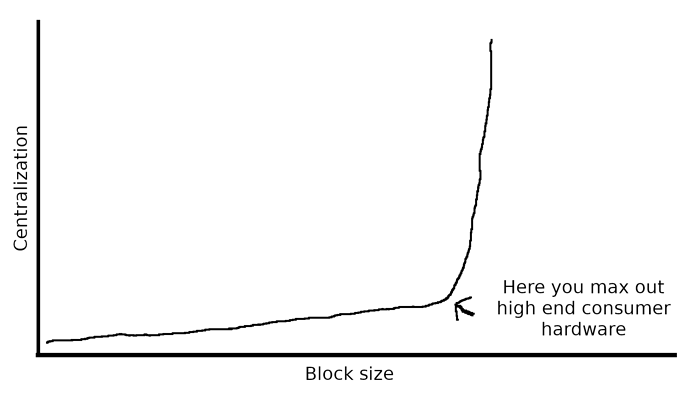

> My suggestion was actually to bound it between half BIP101’s rate and double BIP101’s rate, with the caveat that the upper bound (a) is contingent upon sustained demand, and (b) the upper bound curve originates at the time at which sustained demand begins, not at 2016. In other words, the maximum growth rate for the demand response element would be 2x/year.
>
> I specified it this way because I think that BIP101’s growth rate is a pretty close estimate of actual capacity growth, so the BIP101 curve itself should represent the center of the range of possible block size limits given different demand trajectories.
>
> (But given that these are exponential curves, 2x-BIP101 and 0.5x-BIP101 might be too extreme, so we could also consider something like 3x/2 and 2x/3 rates instead.)
>
> If there were demand for 8 GB blocks and a corresponding amount of funding for skilled developer-hours to fully parallelize and UDP-ize the software and protocol, we could have BCH ready to do 8 GB blocks by 2026 or 2028. BIP101’s 2036 date is pretty conservative relative to a scenario in which there’s a lot of urgency for us to scale. At the same time, if we don’t parallelize, we probably won’t be able to handle 8 GB blocks by 2036, so BIP101 is a bit optimistic relative to a scenario in which BCH’s status is merely quo. (Part of my hope is that by adopting BIP101, we will set reasonable but strong expectations for node scaling, and that will banish complacency on performance issues from full node dev teams, so this optimism relative to status-quo development is a feature, not a bug.)
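
Toomim’s bounding rule can be sketched as a clamp between two exponential curves. This is a minimal sketch under stated assumptions, not his implementation nor the proposal’s: rates are expressed in doublings per year (BIP101 = 0.5), the lower curve’s origin and base size and the upper curve’s base size at the moment sustained demand begins are hypothetical parameters, and `demand_limit_mb` stands for whatever the demand-driven algorithm would otherwise output. The factors default to 0.5 and 2.0 and can be set to 2/3 and 3/2 for the milder variant.

```python
BIP101_DOUBLINGS_PER_YEAR = 0.5  # BIP101: 2x every 2 years


def curve_mb(base_mb: float, years_since_origin: float,
             doublings_per_year: float) -> float:
    """Fixed-schedule exponential curve, equal to base_mb at its origin."""
    return base_mb * 2.0 ** (doublings_per_year * max(years_since_origin, 0.0))


def bounded_limit_mb(demand_limit_mb: float,
                     years_since_lower_origin: float,
                     years_since_demand_start: float,
                     lower_base_mb: float,
                     upper_base_mb: float,
                     low_factor: float = 0.5,    # half BIP101's rate (or 2/3)
                     high_factor: float = 2.0):  # double BIP101's rate (or 3/2)
    """Clamp the demand-driven limit between a slow lower curve (replacing the
    flat floor) and an upper curve that starts when sustained demand begins."""
    lower = curve_mb(lower_base_mb, years_since_lower_origin,
                     low_factor * BIP101_DOUBLINGS_PER_YEAR)
    upper = curve_mb(upper_base_mb, years_since_demand_start,
                     high_factor * BIP101_DOUBLINGS_PER_YEAR)
    # If the upper curve is ever below the lower one, the lower one wins.
    return min(max(demand_limit_mb, lower), max(upper, lower))


# Hypothetical numbers: the lower curve started at 32 MB four years ago,
# sustained demand began two years ago at 32 MB, demand asks for 200 MB.
print(bounded_limit_mb(200, 4, 2, 32, 32))
```

With these made-up inputs the lower curve sits at 64 MB and the upper one at 128 MB, so a 200 MB request is capped at 128 MB, while a 10 MB one would be raised to 64 MB.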