It would just be a milestone on the path to 1 GB. After breaking 30 MB, it could take 4-5 years to reach 1 GB, i.e. two market cycles.
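One way to sanity-check the 4-5 year estimate is to compute the steady annual multiplier it implies, (1000 / 30)^(1/years). This is a minimal sketch. It assumes 1 GB = 1000 MB and smooth compound growth, which is an idealization and not a forecast.

```python
def implied_annual_growth(start_mb: float, end_mb: float, years: float) -> float:
    """Average yearly multiplier needed to grow from start_mb to end_mb in `years` years.

    Assumes steady compound growth (an idealization; real usage is bursty).
    """
    return (end_mb / start_mb) ** (1.0 / years)


if __name__ == "__main__":
    # 30 MB -> 1 GB (taken here as 1000 MB) over 4 and 5 years
    for years in (4, 5):
        factor = implied_annual_growth(30, 1000, years)
        print(f"{years} years: ~{factor:.2f}x per year")
```

For 4 and 5 years this comes out to roughly 2.4x and 2.0x per year, respectively.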

Explicitly #2, but implicitly #1.

Cool. Let’s implement it your way and move forward. I’ve said my bit.

LOL FML, I can’t keep my fool mouth shut. SMH at me. Oh well.

Today, BCH offers a Guaranteed Minimum Capacity of 32MB.

What will be the Guaranteed Minimum Capacity in 2033 (10yrs) and 2044 (20yrs) under each proposal, and why would one proposal offer only 32 MB instead of a larger Guaranteed Minimum?

Maybe there’s a good reason why we shouldn’t be improving this core metric that I hadn’t considered.

The guarantee will not be static. It’s more like this: we guarantee that we will expand capacity once whatever we offer “now” gets close to being used up.
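The toy model below shows the difference between a static floor and a limit that expands as it gets used up. It is a minimal sketch and NOT the CHIP’s algorithm, which has its own control function and elastic buffer. The function name `adaptive_limits`, the EMA smoothing, the 50% threshold and the 1% per-block step are all hypothetical choices made for illustration. The only value taken from the thread is the 32 MB floor.

```python
FLOOR_BYTES = 32_000_000  # 32 MB guaranteed minimum, as stated in the thread


def adaptive_limits(block_sizes, floor=FLOOR_BYTES, alpha=0.05,
                    threshold=0.5, step=1.01):
    """Toy adaptive block size limit (hypothetical; NOT the CHIP's algorithm).

    Returns the limit in force after each block in `block_sizes` (bytes).
    - `ema` is an exponential moving average of block sizes (smoothed demand).
    - While smoothed demand exceeds `threshold` of the current limit, the
      limit grows by `step` per block; otherwise it decays by `step`.
    - The limit never drops below `floor`: that is the only hard guarantee.
    """
    ema = 0.0
    limit = float(floor)
    history = []
    for size in block_sizes:
        ema += alpha * (size - ema)      # smoothed demand
        if ema > threshold * limit:
            limit *= step                # offer is getting used up: expand
        else:
            limit /= step                # demand is low: relax toward the floor
        limit = max(limit, floor)        # guaranteed minimum
        history.append(limit)
    return history


if __name__ == "__main__":
    # Hypothetical demand: 1,000 blocks of 30 MB, then 1,000 empty blocks.
    limits = adaptive_limits([30_000_000] * 1_000 + [0] * 1_000)
    print(f"peak limit: ~{max(limits) / 1e6:.0f} MB")
    print(f"final limit: {limits[-1] / 1e6:.0f} MB")
```

In this toy, sustained 30 MB demand pushes the limit to roughly twice the smoothed demand, which follows from the 50% threshold. When demand disappears, the limit relaxes back to 32 MB but never goes below it. The floor is the guaranteed minimum, while `step` plays the role of the allowed growth rate.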

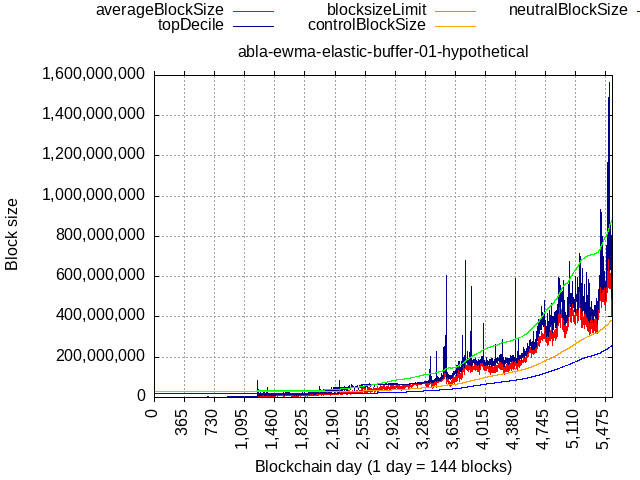

It could look like this (about 180 MB in 2033, 800 MB in 2038). I simply multiplied the empirical combined block sizes (BTC+LTC+ETH+BCH) by 64 and initialized the algorithm at 32 MB.

It could grow faster – if we do the work to bring in the volume.

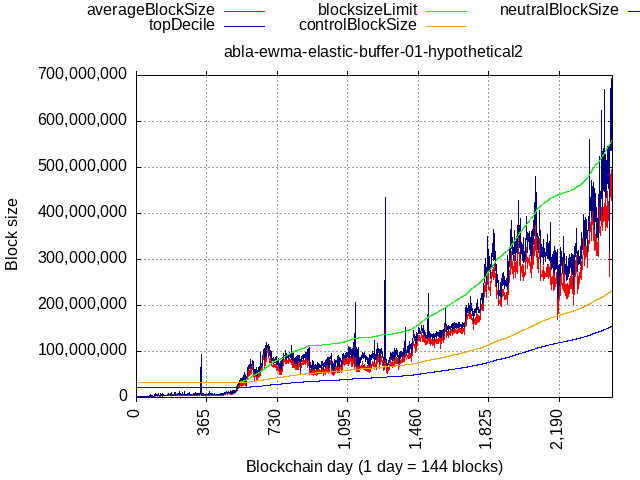

Ethereum data x64, with a flat 32 MB floor: 550 MB in 2030.

FWIW, that’s not the guaranteed minimum. The guaranteed minimum is the number that will be in force no matter what happens, which is 32 MB. You’re talking about the allowed growth rate.

FWIW.

But it is not really a strong guarantee. It only guarantees that nodes will accept such blocks should they be mined, not that everything would go smoothly in a broader sense if we went from 0 to 32 MB overnight. Current capacity is not 32 MB but the aggregate of individual miners’ self-limits weighted by their relative hashrate. It’s not possible to make guarantees in a decentralized network. We have to trust the incentives, i.e. that miners would lift their self-limits to 32 MB out of self-interest. That doesn’t mean there wouldn’t be some pain points between now and then.
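The point about effective capacity can be written as a hashrate-weighted average. Suppose miner i has relative hashrate h_i and only mines blocks up to its self-limit L_i, which is itself capped by the consensus limit. Then the expected maximum size of the next block is sum(h_i * min(L_i, consensus limit)) / sum(h_i). This is a minimal sketch. The function name and the miner mix in the usage example are hypothetical.

```python
from typing import Iterable, Tuple

CONSENSUS_LIMIT = 32_000_000  # bytes; the 32 MB limit discussed in the thread


def effective_capacity(miners: Iterable[Tuple[float, int]],
                       consensus_limit: int = CONSENSUS_LIMIT) -> float:
    """Expected maximum size of the next block, in bytes.

    `miners` holds (relative_hashrate, self_limit_bytes) pairs. Each miner
    finds the next block with probability proportional to its hashrate and
    will not produce a block larger than its own self-limit, nor larger
    than the consensus limit.
    """
    miners = list(miners)
    total_hashrate = sum(h for h, _ in miners)
    if total_hashrate <= 0:
        raise ValueError("total hashrate must be positive")
    weighted = sum(h * min(limit, consensus_limit) for h, limit in miners)
    return weighted / total_hashrate


if __name__ == "__main__":
    # Hypothetical mix: 3 units of hashrate at an 8 MB self-limit,
    # 2 units at the full 32 MB.
    mix = [(3, 8_000_000), (2, 32_000_000)]
    print(effective_capacity(mix) / 1e6, "MB")  # prints 17.6 MB
```

Nodes would accept 32 MB blocks, yet this mix could produce at most 17.6 MB per block on average. If every miner kept an 8 MB self-limit, effective capacity would be 8 MB.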

Speaking of guarantees, what guarantees are there that miners will actually raise their self-limits as soon as the 8 MB self-limit starts getting hit often? What guarantees that some apps and infra won’t face downtime should someone flip the switch and churn out, say, 20 MB blocks nonstop? I imagine volunteer-run instances of Sickpig’s explorer would go down, some Electrum servers would go down, and maybe Paytaca’s new BCMR indexer would go down. If the 20 MB is the result of spam, then the price wouldn’t move even with the TX volume. So where would people get the money to pay for the upgrades needed to get back online? Someone must pay for all the infra, and the only place it can realistically come from is BCH price appreciation and fresh money flooding into our ecosystem.

This made me think we could slightly adjust the initialization and elastic buffer parameters. It should not start at 0, and if it is initialized differently, maybe we can tolerate a bigger multiple at maximum stretch. That would still let us stay under the short/mid-term potential capacity (256 MB) and the long-term projections (BIP101).
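For reference, BIP101 (one of the long-term projections mentioned above) is a fixed schedule. As I recall the BIP text, it starts at 8,000,000 bytes on 2016-01-11 00:00:00 UTC and doubles every 63,072,000 seconds (730 days), with linear interpolation between doublings. It stops after ten doublings, at 8,192,000,000 bytes. Verify these values against the BIP before relying on them. Below is a minimal sketch of that schedule; the constant and function names are my own. It could be used to check that a given parameterization stays below the schedule.

```python
from datetime import datetime, timezone

BIP101_START_TIME = 1_452_470_400   # 2016-01-11 00:00:00 UTC
BIP101_START_SIZE = 8_000_000       # bytes
DOUBLING_PERIOD = 63_072_000        # seconds (730 days)
MAX_DOUBLINGS = 10                  # schedule stops at 8,192,000,000 bytes


def bip101_limit(timestamp: int) -> int:
    """Max block size (bytes) under the BIP101 schedule at a Unix timestamp."""
    if timestamp < BIP101_START_TIME:
        raise ValueError("BIP101 schedule starts on 2016-01-11")
    doublings, into_period = divmod(timestamp - BIP101_START_TIME, DOUBLING_PERIOD)
    if doublings >= MAX_DOUBLINGS:
        return BIP101_START_SIZE << MAX_DOUBLINGS
    base = BIP101_START_SIZE << doublings
    # Linear interpolation from `base` toward 2 * base over the current period.
    return base + base * into_period // DOUBLING_PERIOD


if __name__ == "__main__":
    for year in (2024, 2033, 2044):
        ts = int(datetime(year, 1, 1, tzinfo=timezone.utc).timestamp())
        print(year, f"{bip101_limit(ts) / 1e6:,.0f} MB")
```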

Great point. The block size limit should be lowered to below 20MB and frozen, since we are designing for those nodes, and cannot ever say for sure if they will be upgraded.

/s but only barely

I feel you, but you’re reading it wrong, as if it somehow indicates that I don’t believe we can scale. Sure we can, but everyone needs resources to do it, so where will those come from? Who’s gonna invest resources way ahead of the network actually getting some traction and increased economic value? You think a fixed schedule will force people to do the work? I was triggered by your “guarantees”. There are no guarantees. The best we can do is have faith in the incentives and ensure that, once the incentives are there (network usage growing), we won’t get stuck due to a stupid line of code.

Guys, polite reminder.

You’re in bikeshedding mode again.

We should really just get this done. Like really.

We worked it out in PMs, and I added this paragraph to the CHIP’s “evaluation of alternatives” section:

It will likely become reasonable to increase the “stand-by” capacity simply because it will become trivial to support it, even if it is orders of magnitude underutilized.

After all, that is the current state (a few hundred kB utilized out of the available 32 MB).

However, it is impossible to predict what will be trivial in the absence of network growth. The argument here is that this should be left to the good judgement of network participants instead of being delegated to a fixed schedule. An example would be some future CHIP proposing to lift the floor value to 64 MB in 2028.

Coding a fixed schedule now would be scope creep from my PoV. The CHIP never set out to predict what people will do. Instead, it sets out to react to what they’re actually doing and remove the risk of deadlock. It counts on the incentives of TX load “pushing” people to keep upgrading, while still being slow enough at the extremes to remain manageable.

Agreed with the above & moving forward.

My apologies to the group for being disruptive.

No need to apologize. You can be more disruptive next year.

We do not need a perfect solution right now. We only need a very good solution.

Once it is “locked in” in the brains of the populace (and especially miners) as a default way forward, we can bikeshed improvements ad infinitum.

This morning I reviewed the complete CHIP and suggested a number of typo/grammar corrections to @bitcoincashautist.

My two non-typo points that I raised were:

- I would love some numbers demonstrating that the new fast sync requirements won’t be an issue for light wallets (very relevant for Selene Wallet). The data addition should be trivial, but it would be worth having some clarifying maths to back that up. We’re already seeing enthusiastic Selene Wallet users in Venezuela with very poor download speeds, so sync times are a critical issue for them. Obviously this is something we don’t want to compromise going forward.

- I think the part of the CHIP that goes into “borrowing” future capacity is a little unclear and could be rephrased.

That said, I think there’s been an immense amount of quality work done on this proposal, and I am in full support of it.

I strongly agree with @ShadowOfHarbringer’s points about social consensus. We shouldn’t let perfect be the enemy of the good (or in this case, the already very, very good), and activating this CHIP in the 2024 upgrade would be a massive step forward. Delaying it for a year seems foolhardy. Pretty much everyone seems to be on board with having an algorithm in the first place. So it’s much better to be discussing changes to the algorithm afterwards than to keep debating whether to adopt an algorithm at all and, if so, which exact one.

@Jessquit it’s not exactly a topic for this thread, but I’m not much of a fan of the “Untethered” idea. I think the marketing play on words is fine, but I am averse to this kind of “limitless” messaging. It strongly reminds me of the endless BSV arguments and opinions, which always seem to come down to “Well, now the Bitcoin blockchain has been UNLEASHED! And you will see the TRUE power of Bitcoin/the free market now that we have UNLEASHED it with no blocksize/data limits/consensus changes etc.”. Their arguments always come down to a naive disregard for engineering tradeoffs or an unnuanced belief in the supposedly endless powers of “free markets”. In my mind, their advocacy always ends up sounding like some kind of Dragon Ball Z villain (“haha, my true powers have been unveiled, NOW you’ll see what I can do”). They’ve been “unleashing” BSV for years, and it’s not impressing anyone lol. So I think we should avoid marketing BCH in any style reminiscent of that. It tends to attract loud, opinionated people with a binary and uneducated view that any kind of sensible engineering is an affront to their free-market sensibilities. But that’s a separate discussion, and I like that you’re thinking up new promotional ideas. Let’s workshop this some more in another venue.

Oh, I just discovered that the “light wallets” affected by this change means full nodes syncing backwards from a UTXO commitment, not pruned nodes and not SPV. That resolves my Selene Wallet concern entirely. Hopefully the spec can be updated to avoid any confusion there.

That’s fine, nobody is required to use the idea. It’s there for people who find it useful.

Proposed final draft of Executive Summary online now:

- Done

- Done

- In progress

- Todo

- Todo

- Todo

“CHIP-2023-04 Adaptive Blocksize Limit Algorithm for Bitcoin Cash” (ac-0353f40e / Adaptive Blocksize Limit Algorithm for Bitcoin Cash · GitLab) is implementation-ready. Note that the CHIP title has been updated.

If anyone wants to have a go at a test implementation, or just review the CHIP and state their approval/abstention/disapproval of activating it, now is the time!

To get a feel for how it works, I suggest checking out the risks section first:

The CHIP has reference implementations in both C and C++. It also has a simple test suite that locally generates .csv test vectors covering the full range of inputs to the algorithm.

My endorsement post:

Blocksize Algorithm CHIP endorsement

I (and The Bitcoin Cash Podcast & Selene Wallet) wholly endorse CHIP-2023-04 Adaptive Blocksize Limit Algorithm for Bitcoin Cash for lock-in on 15 November 2023. A carefully selected algorithm that responds to real network demand is an obvious improvement. It relieves the social burden of discussions around optimal blocksizes, as well as the implementation costs & uncertainty around scaling for miners & node operators. There is also some benefit in the community signalling its commitment to scaling & its refusal to repeat the historic delays that resulted from previous blocksize increase contention.

The amount of work done by bitcoincashautist has been very impressive & inspiring. I refer not only to the work on the spec itself, but also to iterating on feedback & communicating with stakeholders to patiently address concerns across a variety of mediums. Having reviewed the CHIP thoroughly, I am convinced the chosen parameters accommodate edge cases in a technically sustainable manner.

It is a matter of some urgency to lock in this CHIP for November. This will solidify the social contract to scale the BCH blocksize as demand justifies it, all the way to global reserve currency status. Furthermore, it will free up the community zeitgeist to tackle new problems for the 2025 upgrade.

A blocksize algorithm implementation is a great step forward for the community. I look forward to this CHIP locking-in in November & going live in May 2024!

Jeremy