Privacy beyond encryption

Even if encryption were widely used, it’s important to understand that powerful network-layer attackers (local admins, ISPs, governments, etc.) could still fingerprint the current P2P protocol, and even de-anonymize transaction broadcast origins, based on packet sizes and timings.

Handshake fingerprinting

BIP324 leaves room in the handshake protocol for “garbage” to allow later upgrades that introduce traffic shaping measures and confuse fingerprinting (make the connection look like some other, non-censored protocol). However, the BIP stops short of actually implementing such protections.

Libp2p would probably give us more of a head start than BIP324: much of our handshake(s) could already be confused with those of other libp2p-based protocols, and in practice, e.g., the WebTransport protocol might already be hard to differentiate from other web traffic. (The underlying QUIC protocol is well designed to resist traffic analysis, with encrypted headers, and it offers more efficient loss recovery than TCP, provided the WebTransport connection isn’t downgraded to HTTP/2 because UDP is blocked.)

While I don’t think fingerprint resistance is a very high priority, if a low-cost change (simple implementation + minimal impact on latency and bandwidth) might reduce the effectiveness of attempts to block all BCH connections, that would probably be worth exploring.

For example, the final step of a hypothetical libp2p WebTransport + BCH handshake, the VERSION + VERACK exchange, has very predictable timings and message lengths. Introducing random padding, slight delays, and/or otherwise muddying that signature could reduce an attacker’s certainty in identifying the connection as a BCH P2P connection.
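A minimal sketch of that idea, assuming a hypothetical framing in which each handshake message carries a 2-byte length prefix followed by random padding that the receiver discards, plus a small random send delay. The constants `MAX_PAD_BYTES` and `MAX_JITTER_S` and the helpers `obfuscate`/`deobfuscate` are illustrative assumptions, not part of BIP324, libp2p, or any BCH node implementation; in a real transport the padded frame would then be encrypted.

```python
import os
import random

# Hypothetical parameters, chosen only for illustration.
MAX_PAD_BYTES = 256    # upper bound on random padding per message
MAX_JITTER_S = 0.050   # upper bound on added send delay per message (50 ms)


def obfuscate(payload: bytes, rng: random.Random) -> tuple[bytes, float]:
    """Return (padded_frame, delay_seconds) for one handshake message.

    Frame layout (hypothetical, not a real wire format):
    2-byte big-endian payload length | payload | random padding.
    """
    if len(payload) > 0xFFFF:
        raise ValueError("payload too large for a 2-byte length prefix")
    pad_len = rng.randint(0, MAX_PAD_BYTES)
    frame = len(payload).to_bytes(2, "big") + payload + os.urandom(pad_len)
    delay = rng.uniform(0.0, MAX_JITTER_S)
    return frame, delay


def deobfuscate(frame: bytes) -> bytes:
    """Read the length prefix and discard the trailing padding."""
    n = int.from_bytes(frame[:2], "big")
    return frame[2:2 + n]


rng = random.Random()
version = b"version-payload"  # stand-in for a serialized VERSION message
frame, delay = obfuscate(version, rng)
# A sender would wait `delay` seconds (e.g. time.sleep) before writing `frame`.
assert deobfuscate(frame) == version
```

Uniform padding and jitter only blur the signature; how much of each is actually needed to meaningfully confuse a classifier would have to be measured.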

Inspecting transaction propagation despite encryption

A far more critical weakness for practical privacy against powerful network-layer adversaries (local admin, ISPs, governments, etc.) is the predictability of timing and message lengths involved in transaction propagation.

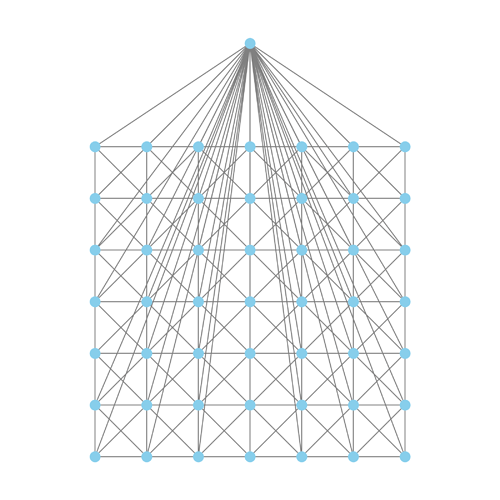

Fundamentally, all BCH transactions ultimately become public. Even if the attacker cannot break the encryption between honest BCH peers, if they can record the timings and sizes of packets traveling between connections, they can ultimately unwind the transaction propagation path (even back through a Clover, Dandelion++, etc. broadcast) to the node which first had the transaction. Encryption is practically useless in this scenario. (IMO, this is a weakness of BIP324 without other improvements.)
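To make the threat concrete, here is a deliberately naive sketch of the simplest form of this analysis (a "first-spy" style heuristic). It assumes the attacker logs every encrypted packet on the links it can observe and that each transaction message adds a fixed framing overhead; the `OVERHEAD = 21` bytes value, the `Packet` record, and the sample data are hypothetical. Real attacks would allow size tolerances and correlate many transactions and timings rather than rely on one exact size match.

```python
from dataclasses import dataclass

# Hypothetical fixed per-message encryption/framing overhead, in bytes.
OVERHEAD = 21


@dataclass(frozen=True)
class Packet:
    time: float  # observation timestamp, seconds
    src: str     # sending node (e.g. an IP address)
    dst: str     # receiving node
    size: int    # ciphertext length, bytes


def propagation_path(packets, tx_size, overhead=OVERHEAD, tolerance=0):
    """Return (src, dst) hops, in time order, of packets whose length
    matches a publicly known transaction size plus framing overhead."""
    target = tx_size + overhead
    matches = sorted(
        (p for p in packets if abs(p.size - target) <= tolerance),
        key=lambda p: p.time,
    )
    return [(p.src, p.dst) for p in matches]


def likely_origin(packets, tx_size, overhead=OVERHEAD, tolerance=0):
    """Guess the first sender: the source of the earliest matching packet."""
    path = propagation_path(packets, tx_size, overhead, tolerance)
    return path[0][0] if path else None


packets = [
    Packet(10.00, "A", "B", 247),  # size matches 226 + 21
    Packet(10.05, "B", "C", 61),   # unrelated small message
    Packet(10.30, "B", "C", 247),  # B relays the same transaction
]
print(propagation_path(packets, tx_size=226))  # [('A', 'B'), ('B', 'C')]
print(likely_origin(packets, tx_size=226))     # A
```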

The paper "Padding-only Defenses Add Delay in Tor" includes great background on this sort of attack against Tor. It notes (emphasis added):

[Website Fingerprinting] attacks use information about the timing, sequence, and volume of packets sent between a client and the Tor network to detect whether a client is downloading a targeted, or “monitored,” website.

These attacks have been found to be highly effective against Tor, identifying targeted websites with accuracy as high as 99% [6] under some conditions.

And a snippet I think we should keep in mind when reviewing solutions (below):

[…] it may be desirable to consider allowing cell delays, since adding padding already incurs latency, and cell delays may in fact reduce the resource stress caused by [Website Fingerprinting] defenses.

The worst offenders: INV and GETDATA

Encrypted transaction inspection is made much easier by the predictability of INV and GETDATA messages. Their distinct timing and length patterns allow the attacker to glean 1) that they’re looking at INV/GETDATA rather than PTX or TX, 2) the number of inventory items being advertised, and 3) the number of transactions being requested in response.
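For example, an INV (or GETDATA) payload is a CompactSize item count followed by one 36-byte inventory vector (4-byte type + 32-byte hash) per item, so the message length grows linearly with the item count and can be inverted. The sketch below assumes a fixed per-message framing overhead of 21 bytes, which is my understanding of what BIP324-style v2 framing with a one-byte short message ID adds (3-byte encrypted length, 1 header byte, 1 message-type byte, 16-byte authentication tag); treat that figure as an assumption, and pass `overhead=24` for the unencrypted v1 message header. The `max_count` default of 50,000 entries is likewise an assumed INV size cap.

```python
INV_ENTRY_BYTES = 36  # 4-byte inventory type + 32-byte hash


def compact_size_len(n: int) -> int:
    """Encoded length of a Bitcoin-style CompactSize integer."""
    if n < 0xFD:
        return 1
    if n <= 0xFFFF:
        return 3
    if n <= 0xFFFFFFFF:
        return 5
    return 9


def inv_message_size(count: int, overhead: int = 21) -> int:
    """On-the-wire size of an INV/GETDATA carrying `count` items.

    `overhead` is an assumed fixed per-message framing cost.
    """
    return overhead + compact_size_len(count) + INV_ENTRY_BYTES * count


def infer_inv_count(observed: int, overhead: int = 21, max_count: int = 50_000):
    """Invert an observed message size back to its item count (or None)."""
    for count in range(1, max_count + 1):
        if inv_message_size(count, overhead) == observed:
            return count
    return None


print(inv_message_size(3))   # 130
print(infer_inv_count(130))  # 3
print(infer_inv_count(131))  # None: not a possible INV/GETDATA length
```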

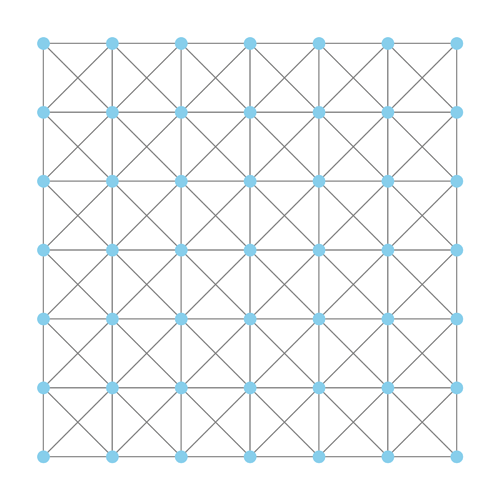

All this information significantly reduces the attacker’s uncertainty, even for attacks against Clover, Dandelion++, etc.:

- INV and GETDATA messages can be more definitively filtered from timing data, clarifying the remaining picture of mostly TX and/or PTX message propagation,
- INV messages which do not trigger GETDATA messages are easily distinguished from PTX messages,
- The remaining TX timing data can even be more clearly unwound and labeled given knowledge of how many items were requested in each interaction – each time window is a sudoku puzzle that can be solved using the now-public transaction sizes from that time window.
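The "sudoku" step can be sketched as a small subset-sum search. This assumes the attacker already knows how many transactions were requested in a time window (from the GETDATA length), has summed the ciphertext bytes that flowed back, and has the public sizes of transactions first seen around that window. The transaction IDs, sizes, and the 21-byte per-message overhead are made up for illustration.

```python
from itertools import combinations

TX_OVERHEAD = 21  # hypothetical per-message framing overhead, bytes


def candidate_sets(public_txs, requested, observed_bytes, overhead=TX_OVERHEAD):
    """Yield tuples of txids whose framed sizes sum to observed_bytes.

    public_txs:     dict of txid -> serialized size (public once relayed/mined)
    requested:      number of items in the GETDATA (inferred from its length)
    observed_bytes: total ciphertext bytes seen in response during the window
    """
    ids = sorted(public_txs)
    for combo in combinations(ids, requested):
        if sum(public_txs[t] + overhead for t in combo) == observed_bytes:
            yield combo


public_txs = {"t1": 226, "t2": 374, "t3": 192, "t4": 408}
print(list(candidate_sets(public_txs, requested=2, observed_bytes=460)))
# [('t1', 't3')]
print(list(candidate_sets(public_txs, requested=2, observed_bytes=642)))
# [('t1', 't2'), ('t3', 't4')]
```

In the second query two different pairs fit the same total, so an attacker would have to narrow them down by cross-referencing other windows or links; every extra constraint leaked by predictable lengths makes that easier.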

Solutions

As mentioned above, users needing the best possible privacy should broadcast using Tor (but not necessarily listen exclusively via Tor), where their broadcast will also be obscured by Tor’s much larger real-time anonymity set than (I think) any cryptocurrency can currently offer.

As for improving the BCH P2P protocol’s resistance to timing analysis – I haven’t done enough review to be confident about any particular approach.

Right now, I’m thinking we should try to avoid:

- Padding messages to fixed sizes: this would trade significant additional bandwidth costs for beyond-casual privacy against powerful network-layer adversaries. It’s not necessarily in every BCH user’s interest to make that trade. Instead, let BCH specialize in being efficient money, and users who need that additional privacy should use BCH through a network that specializes in privacy (Tor, I2P, etc.)
- Decoy traffic: the same efficiency-for-privacy tradeoff. We get the best of both worlds by being as efficient as possible and letting users choose a general-purpose privacy network if they need one.
- Meaningful delays in the typical user experience: as mentioned above, I think we should keep the common path free of artificial delays, i.e. PTX messages should be as fast as possible, offering casual privacy without much impact on user-perceived speed. Ultimately, this increases the anonymity set size by making PTX less annoying to leave enabled for users/wallets who don’t particularly care about privacy.
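To put a rough number on the bandwidth side of that trade, this sketch compares padding each message up to the next power of two against padding every message to one fixed 1024-byte size. The message sizes are hypothetical, chosen only to illustrate the arithmetic, and are not measured from real BCH traffic.

```python
def pad_to_power_of_two(n: int) -> int:
    """Smallest power of two >= n (1 for n <= 1)."""
    return 1 << max(n - 1, 0).bit_length()


def pad_to_fixed(n: int, size: int = 1024) -> int:
    """Pad to one fixed size; larger messages would need splitting (not modeled)."""
    return max(n, size)


def padding_overhead(sizes, pad) -> float:
    """Extra bytes sent, as a fraction of the unpadded total."""
    raw = sum(sizes)
    padded = sum(pad(s) for s in sizes)
    return (padded - raw) / raw


sizes = [61, 130, 247, 1000]  # hypothetical message lengths, bytes
print(f"power-of-two buckets:  {padding_overhead(sizes, pad_to_power_of_two):.0%}")
print(f"fixed 1024-byte cells: {padding_overhead(sizes, pad_to_fixed):.0%}")
```

With these made-up sizes, power-of-two buckets add about 11% and fixed 1024-byte cells about 185%; the more uniform the padding (and so the stronger the privacy), the more bandwidth small messages like INV waste.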

Admittedly, this would leave our toolbox quite empty.

One option to possibly strengthen Clover against this sort of analysis (at the cost of broadcast latency) would be to instead advertise “PTX transactions” as a new inventory type, having nodes fetch and re-advertise them the same way as TX (since the 3-step INV + GETDATA + TX pattern is easily distinguished from 1-way PTX broadcasts). I don’t know whether the Clover authors considered that and decided against it (I’ve reached out to ask), but it’s probably worth testing how badly it impacts latency. If we could do it while keeping typical PTX latency under maybe ~10s, it’s probably worthwhile. Otherwise, maybe we could explore making “DTX transactions” work via INV broadcast (so PTX is fast with casual privacy, while DTX is the dragnet-resistant option).
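A back-of-envelope model for that latency question, assuming each hop costs one one-way network delay for a pushed PTX, versus three one-way delays (INV, GETDATA, TX) plus a wait for the next INV batch when relayed via inventory. The hop count, delays, and batching interval below are hypothetical parameters, not measurements of any node implementation or of Clover.

```python
import math


def ptx_push_latency(hops: int, one_way_s: float) -> float:
    """Direct 1-way push at every hop."""
    return hops * one_way_s


def inv_relay_latency(hops: int, one_way_s: float, batch_delay_s: float) -> float:
    """Each hop waits for the next INV batch, then INV -> GETDATA -> TX."""
    return hops * (batch_delay_s + 3 * one_way_s)


def max_hops_within(budget_s: float, one_way_s: float, batch_delay_s: float) -> int:
    """Largest hop count whose INV-relayed latency fits within the budget."""
    return math.floor(budget_s / (batch_delay_s + 3 * one_way_s))


# Hypothetical: 8 hops, 100 ms one-way delay, 1 s average INV batching wait.
print(round(ptx_push_latency(8, 0.1), 3))        # 0.8
print(round(inv_relay_latency(8, 0.1, 1.0), 3))  # 10.4
print(max_hops_within(10.0, 0.1, 1.0))           # 7
```

Under these made-up numbers the batching wait dominates the per-hop cost, so the INV batching interval would likely be the most important parameter to vary when testing.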

I’d be very interested in others’ thoughts on INV and GETDATA lengths/timings.

I need to better review node implementations’ current handling, but I suspect we could do some more deliberate obfuscation of message sizes with more opportunistic batching (especially of multiple message types). Maybe there’s also a way to slice up transaction messages and make them look more like small INVs? (But maybe just PTX and/or DTX if that would slow down TX propagation?)
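One way to picture opportunistic batching: queue outgoing messages of any type for a short window, then emit them as a single frame rounded up to a bucket size, so an observer sees one blob instead of a distinct INV-sized packet and a TX-sized packet. The record layout (1-byte type, 4-byte length), the reserved padding type 0, the message type codes, and `BUCKET = 64` are all hypothetical choices for this sketch; in practice the frame would be encrypted by the transport.

```python
import struct

BUCKET = 64  # hypothetical: round every batched frame up to a multiple of this
RECORD_HEADER = struct.Struct(">BI")  # 1-byte message type, 4-byte length


class Batcher:
    """Collects messages of mixed types and flushes them as one padded frame."""

    def __init__(self, bucket: int = BUCKET):
        self.bucket = bucket
        self.pending = []

    def queue(self, msg_type: int, payload: bytes) -> None:
        if not 1 <= msg_type <= 255:  # type 0 is reserved for padding here
            raise ValueError("msg_type must be in 1..255")
        self.pending.append((msg_type, payload))

    def flush(self) -> bytes:
        body = b"".join(RECORD_HEADER.pack(t, len(p)) + p for t, p in self.pending)
        self.pending.clear()
        pad = (-len(body)) % self.bucket
        return body + b"\x00" * pad  # zero bytes parse as padding (type 0)


def unbatch(frame: bytes):
    """Split a frame back into (msg_type, payload) records, ignoring padding."""
    out, i = [], 0
    while i + RECORD_HEADER.size <= len(frame):
        t, n = RECORD_HEADER.unpack_from(frame, i)
        if t == 0:
            break
        i += RECORD_HEADER.size
        out.append((t, frame[i:i + n]))
        i += n
    return out


b = Batcher()
b.queue(1, b"\x00" * 37)   # e.g. a one-item INV payload (type code 1 is hypothetical)
b.queue(2, b"\x01" * 226)  # e.g. a 226-byte transaction (type code 2 is hypothetical)
frame = b.flush()
print(len(frame))  # 320
```

Here 273 bytes of records become one 320-byte frame; whether bucket rounding like this is worth its bandwidth cost compared to simply batching more aggressively is an open question.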
