Thanks for digging into this @rnbrady! Since you answered your own questions, I’ll just add some comments:

An anonymity set of 2 is pretty dismal, and that’s the best case: it assumes the user didn’t send the transaction straight to one of the attacker’s nodes, it’s what a low-resource attacker can achieve, and it’s before any other attacks or analysis.

E.g. if the transaction spends a CashFusion output, and you’re broadcasting using the same node or Fulcrum server you were using before the CashFusion – you’ve probably helped attackers cross off a lot of valid partitions (again, before other attacks). If many CashFusion inputs/outputs are compromised in this same way, the whole CashFusion is probably compromised.

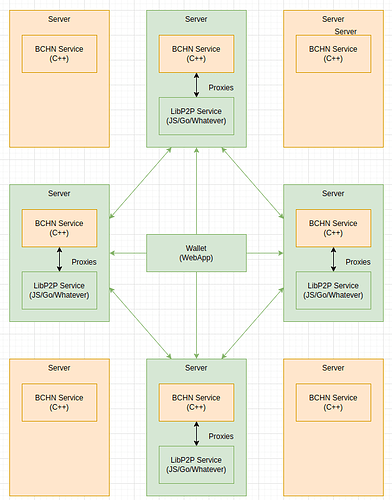

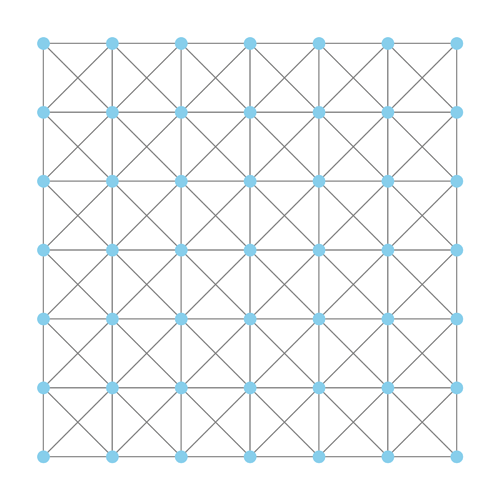

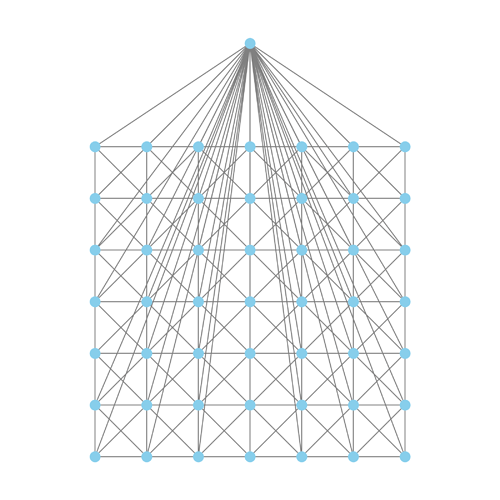

Related: when thinking about network privacy, it’s easy to assume the “outbound” network looks like the left below, but in reality it looks like the right (or worse, with fewer than 7 “honest” connections):

The attacker(s) are actually surrounding you, and they’re connected to almost every node by at least one connection, often outbound (attackers most certainly maintain good uptime and low latency).

On a related note, I want to put out there that I think node implementations should not change their transaction broadcast behavior. Broadcasting to all peers is important for reliability: if e.g. BCHN changed the default to broadcast to fewer peers, attackers could simply black-hole all new transactions (they probably already do). The user experience would suffer, and the attacker would keep getting new timing-based chances to learn about you and the network graph at each re-broadcast attempt.
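To put rough numbers on the black-holing concern, here is a minimal sketch. It assumes the initial broadcast goes to `fanout` peers chosen uniformly at random and that attacker-controlled peers silently drop the transaction. The function name and the peer counts (16 connections, 8 of them hostile) are hypothetical and chosen only for illustration.

```python
from math import comb

def prob_all_blackholed(total_peers: int, attacker_peers: int, fanout: int) -> float:
    """Probability that every peer chosen for the initial broadcast is attacker-controlled.

    Assumes `fanout` peers are chosen uniformly at random without replacement
    and that attacker peers silently drop the transaction.
    """
    if not 0 < fanout <= total_peers:
        raise ValueError("fanout must be between 1 and total_peers")
    # comb() returns 0 when fanout exceeds attacker_peers, so the result is 0.0 there.
    return comb(attacker_peers, fanout) / comb(total_peers, fanout)

# Hypothetical node: 16 connections, half of them attacker-controlled.
for fanout in (1, 2, 4, 16):
    p = prob_all_blackholed(16, 8, fanout)
    print(f"broadcast to {fanout:2d} of 16 peers: P(black-holed) = {p:.4f}")
```

Under these assumptions, the chance of a silently dropped first attempt falls quickly as the fanout grows and reaches zero once the broadcast covers more peers than the attacker controls, which is the reliability argument for announcing to all peers.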

A better solution is to soil transaction broadcast as a heuristic. Just as widespread PayJoin deployment would soil the common-input ownership heuristic, keeping initial broadcasts as they are and introducing PTX (and maybe DTX) messages would retain the faster transaction user experience while reducing or eliminating the value of initial broadcast origin tracing.

This is another item that Clover “gets right” but isn’t explicitly mentioned in the paper. (Maybe because Dandelion++ does it too, and the authors consider it obvious.)

Unless I’m mistaken, BCHN is not. That means services using BCHN – Fulcrum servers, most Chaingraph instances, etc. – are also initially broadcasting via TX flood to all peers.

Edit:

My initial post wasn’t correct: transactions are immediately broadcast to all peers, but only in the next INV for that peer, not as an unsolicited TX. So no, BCHN is not picking just one peer to broadcast new transactions (and should not change that behavior for the reasons described above), but it’s also not immediately blasting TX messages to everyone as I initially said.
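The difference between announcing in the next INV and pushing an unsolicited TX can be sketched with a toy model. This is not BCHN code: the `ToyNode` class, its method names, and the peer labels are hypothetical, and the only thing it assumes is the standard `inv` / `getdata` / `tx` message sequence.

```python
from collections import defaultdict

class ToyNode:
    """Toy model of announce-then-request relay; not BCHN code."""

    def __init__(self, peers):
        self.peers = list(peers)
        self.mempool = {}                      # txid -> raw transaction
        self.pending_inv = defaultdict(list)   # peer -> txids awaiting that peer's next INV
        self.sent = []                         # log of (peer, message type, payload)

    def accept_transaction(self, txid, raw_tx):
        # Queue an announcement for every peer; nothing goes on the wire yet.
        self.mempool[txid] = raw_tx
        for peer in self.peers:
            self.pending_inv[peer].append(txid)

    def flush_inv(self, peer):
        # Called when this peer's next INV is due.
        txids = self.pending_inv.pop(peer, [])
        if txids:
            self.sent.append((peer, "inv", tuple(txids)))

    def on_getdata(self, peer, txid):
        # The full transaction is sent only after the peer requests it.
        if txid in self.mempool:
            self.sent.append((peer, "tx", self.mempool[txid]))

node = ToyNode(["peer_a", "peer_b"])
node.accept_transaction("txid1", b"raw-bytes")
node.flush_inv("peer_a")
node.on_getdata("peer_a", "txid1")
print(node.sent)
```

In this model every peer eventually gets the announcement, but the `tx` message only follows a `getdata`, so a peer that never asks never receives the full transaction from this node.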

(IIRC, my Chaingraph instance regularly reports receipt of unsolicited TX broadcasts from BCHN, but I’m still trying to track down why – it’s possible that’s because it’s mining chipnet and testnet4 blocks? Or possibly just a logging bug; I’ll need to review.)

Hopefully the attack on CashFusion I described above illustrates how incorrect this assumption is.

Network-layer privacy leaks probably de-anonymize most cryptocurrency transactions – even those of “privacy coins”.

I think CashFusion is probably about as private as Monero right now (esp. given Monero’s recently published MAP Decoder attack): ok for casual privacy, but very susceptible to targeted attacks and powerful network adversaries (global dragnet).

I suspect that network-layer attacks have always been the worst privacy problems. E.g. I read between these lines that Zcash probably doesn’t have any intentional backdoors (unless this was intentional), but that experienced privacy experts recognize the fundamentally hard problem of getting strong privacy out of real-time systems.

To illustrate, here’s the conclusion of a recent paper studying attacks on Dandelion, Dandelion++, and Lightning Network: https://arxiv.org/pdf/2201.11860

> Our evaluation of the said schemes reveals some serious concerns. For instance, our analysis of real Lightning Network snapshots reveals that, by colluding with a few (e.g., 1%) influential nodes, an adversary can fully deanonymize about 50% of total transactions in the network. Similarly, Dandelion and Dandelion++ provide a low median entropy of 3 and 5 bit, respectively, when 20% of the nodes are adversarial.

Those are some very worrying numbers, and they imply that “on-chain” privacy systems like CashFusion, Zero-Knowledge Proof (ZKP) covenants, etc. can only offer casual privacy without significant additional operational security.
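For intuition on those entropy figures: an entropy of H bits corresponds to a uniform choice among 2^H candidates, so the quoted medians can be read as effective anonymity set sizes. The helper names below are hypothetical, and reading entropy as an effective set size is an interpretive assumption here, not something taken from the paper.

```python
import math

def effective_anonymity_set(entropy_bits: float) -> float:
    """Size of a uniform candidate set with the same Shannon entropy."""
    return 2.0 ** entropy_bits

def shannon_entropy_bits(probabilities) -> float:
    """Entropy (in bits) of an attacker's probability distribution over candidate senders."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Median entropies quoted from the paper, at 20% adversarial nodes.
for label, bits in [("Dandelion", 3), ("Dandelion++", 5)]:
    size = effective_anonymity_set(bits)
    print(f"{label}: {bits} bits ~ {size:.0f} equally likely senders")

# An anonymity set of 2 with equal probabilities carries 1 bit of entropy.
print(shannon_entropy_bits([0.5, 0.5]))
```

So 3 and 5 bits are roughly equivalent to hiding among 8 and 32 equally likely senders, and any skew in the attacker's estimate makes the real situation worse than the uniform case.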

After more thought (here), I think that should remain out of scope for Bitcoin Cash’s P2P network, and DTX messages should just re-use the PTX behavior with much longer parameters: plenty of other networks (Tor, I2P, etc.) are already working on the general problem of real-time network privacy, and we shouldn’t pretend that our real-time anonymity set is competitive. (As far as I know, no cryptocurrency network is even close.) Our time is better spent making it easier for users to use specific Bitcoin Cash software over Tor, I2P, etc.
