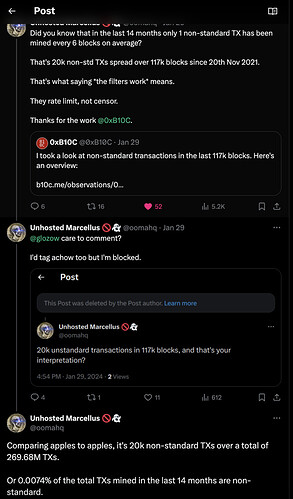

The BTC community is in contention over Ordinals/Inscriptions, which some BTC people don’t like. This has created pushback from some node runners under the banner “#FixTheFilters”: updating BTC relay policy to disallow these “unwanted” transactions, or to make them harder to broadcast. They’re already doing this at the individual node level, but they also want standardness filter changes added to Bitcoin Core, which is of course controversial.

The latest outcome of this is that MARA pool has proactively launched a service, called Slipstream, that lets people pay out of band to get non-standard transactions mined. So it basically allows the public to get around the filters in advance. It also gives everyone access to these out of band shenanigans (such as those Luxor used to mine the original “4MB Wizard” of the Ordinals craze), in a similar way to mempool accelerators. This is ringing alarm bells.

It certainly isn’t a promising sign, but it seems to be the way the mining industry is going, and of course the BCH community doesn’t have much clout or influence there due to low price / hashrate.

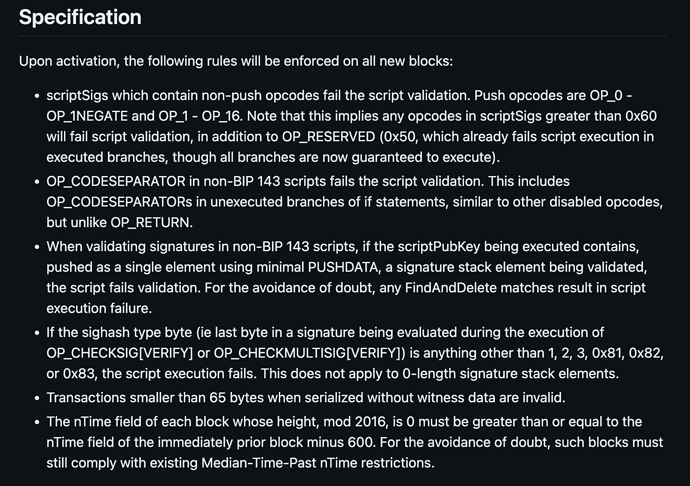

At the same time, suggestions are arising in the BTC community about changes to fix some “non standard vulnerabilities.” See here.

There is already a rough draft BIP from 2019 that addresses some of these issues: see BIP XXX from Matt Corallo. Here are the suggested changes:

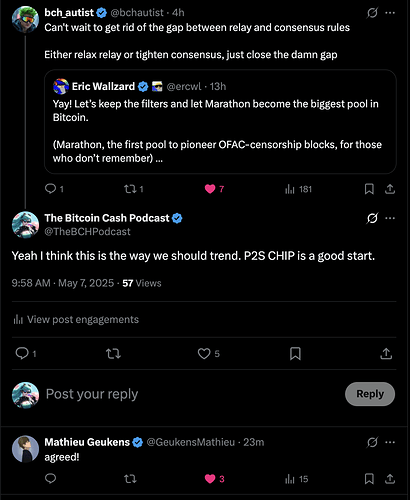

It seems to me that if non-standard transactions were “fixed” at the consensus layer, it would completely void any miner out of band relay services: a block containing such a transaction would simply be invalid, so the services wouldn’t be necessary or work anymore (unless a miner wanted to go as far as attempting a 51% attack over it). The BTC side is in for a nightmare trying to discuss or coordinate such a change, but potentially BCH isn’t.
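To make that reasoning a bit more concrete, here is a toy Python sketch of the difference between a rule that lives only in relay policy and the same rule enforced by consensus. It is not real node code: `Tx`, `is_standard`, `is_consensus_valid`, and `network_outcome` are hypothetical names made up for illustration, and the 65-byte minimum size (one of the suggested changes) is used purely as the example rule.

```python
from dataclasses import dataclass

# Example rule only: a minimum serialized transaction size of 65 bytes.
MIN_TX_SIZE = 65


@dataclass(frozen=True)
class Tx:
    txid: str
    size: int  # serialized size in bytes


def is_standard(tx: Tx) -> bool:
    """Relay policy: a local choice each node makes about what to forward."""
    return tx.size >= MIN_TX_SIZE


def is_consensus_valid(tx: Tx, consensus_min_size: int) -> bool:
    """Consensus: a block containing a failing transaction is invalid for everyone."""
    return tx.size >= consensus_min_size


def network_outcome(tx: Tx, consensus_min_size: int, out_of_band: bool) -> str:
    """Describe what can happen to a transaction under the toy rules above."""
    if not is_consensus_valid(tx, consensus_min_size):
        # No miner can include it without producing an invalid block.
        return "rejected by consensus"
    if is_standard(tx):
        return "relayed and mined normally"
    if out_of_band:
        # Valid but filtered: handing it straight to a miner skips the filters.
        return "mined via out of band submission"
    return "valid but not relayed"


small_tx = Tx(txid="example", size=64)

# Rule exists only as relay policy (no consensus minimum).
print(network_outcome(small_tx, consensus_min_size=0, out_of_band=False))
print(network_outcome(small_tx, consensus_min_size=0, out_of_band=True))

# Same rule moved into consensus: paying a miner directly no longer helps.
print(network_outcome(small_tx, consensus_min_size=MIN_TX_SIZE, out_of_band=True))
```

In this simplified model, the out of band path only matters while the rule is policy-only. Once the same check is part of consensus, it makes no difference how the transaction reached the miner.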

I am not sure what to make of this. I also can’t really tell to what extent these suggested changes are needed, or how much they overlap with BCH tech. One of the suggestions is to disallow transactions smaller than 65 bytes, and I know BCH already has a fix to prevent issues around 64-byte transactions, so I would guess we already have that problem resolved. But I can’t tell if the same is true of the other suggested changes.

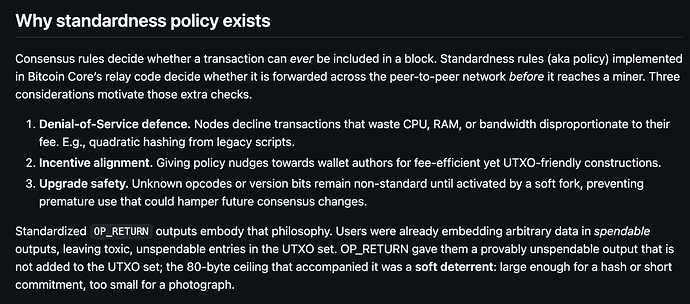

Is this something the BCH community should try to be ahead of the game on? Should we also consider fixing non-standard issues with changes straight into consensus? Do we need to, or have we already? Is there a “good reason” for non-standard transactions? And why is there a separation between consensus rules and node relay policies? That’s a bit out of my depth and I’ve never understood it, so maybe someone else can explain.

Would love some opinions.