Further and further we go towards why TailStorm is so promising.

Moving my reply here to keep on-topic:

I’ll take the opportunity to share the historical ideas on this.

Zero conf is (and always used to be) secure enough for most. It is a risk level that most merchants will be confident with up until maybe $10000. Heavily dependent on the actual price of a unit and the cost of mining a single block (wrt miner assisted ds).

This is like insurance. Historical losses decide the risk profile. Which means, the better we do with merchants not losing money due to double spends, the higher the limit becomes for safe zero-conf payments.

As such, in a world where Bitcoin Cash is actually used for payments the situation you refer to as “requiring a confirmation or more” tends to be the type that doesn’t really care about block time.

They are the situations where you’re giving your personal details and do a bank transfer. They are the situations where you order it online and it will not be delivered today anyway. These are the situations where, in simple words, the difference between 10 minutes and 2 hours confirmations are completely irrelevant.

I don’t disagree at all about the security of 0-conf. Not one bit. Doesn’t mean that we should completely stay away from improving the confirmation experience to impact real world users and situations for the foreseeable future. Not to mention the other benefits.

Nobody disagrees it would be nice. But the cost is outlandish. Would you buy a $500 pair of jeans for a kid that will outgrow them in a year. What about somene buying a $5000 bicycle just to cover the year until it becomes legal to drive?

This is the disconnect that really gets me…

When do you think any such changes are possible to become used by actual people? A TailStorm will take several years before it can be deployed, another couple before it is used by companies (if ever). Reminder that SegWit usage took 7 years to become the majority used address type.

So, you’re advocating ideas that are intermediary, but can’t possibly be in the hands of users in less than 5 years… See how that is a contradiction?

I’m not stopping you, I’m just realistic about what can be done and what gives the best return on investment.

But it has come to the point that it needs to be clarified that this series of ideas is mostly just harmful for BCH at this point. If it stayed on this site it wouldn’t be harmful, but a premature idea that nobody endorses is being pushed in the main telegram channels daily, is pushed on reddit and on 𝕏. The general public thinks this is happening. While not a single stakeholder is actually buying into this.

Hell, there isn’t even any actual reason given for this shortening that stands up to scrutiny.

That is mostly on @bitcoincashauthist, but you both are not listening to stakeholders and just marching on. Again, if its just here, that’s no problem. It is the going to end-user locations with this that is giving a completely different impression.

Tailstorm would offer benefits immediately upon activation, depending on implementation it can immediately:

- reduce variance so that 95% of the time you have to wait less than 13 minutes for 1-conf (vs 47 minutes now).

- reduce block time so that 95% of the time you have to wait less than 1 minute for 1-conf.

Based on my research I think there are 3 ways (edit: 4 actually) to improve confirmation time:

| effect | plain block time reduction | plain subblocks | “inner” Tailstorm | “outer” Tailstorm |

|---|---|---|---|---|

| Reduced target wait time variance (e.g. for 10 or 60 min. target wait) | Y | Y | Y | Y |

| Increased TX confirmation granularity | 1-2 minutes | Opt-in, 1-2 minutes | Opt-in, 10-20s | 10-20s |

| Requires services to increase confirmation requirements to maintain same security | Y | N | N | Y |

| Legacy SPV security | full | 1/K | 1/K | near full |

| Breaks legacy SPV height estimation | Y | N | N | Y |

| Increases legacy SPV overheads (headers) | Y | N | N | Y |

| Selective opt-in “aux PoW” SPV security | N | Y | Y | N |

| Breaks header-first mining every Kth (sub)block | N | Y | Y | Y |

| Additional merkle tree hashing | N | Y, minimal if we’d break CTOR for summary blocks | Y, minimal if we’d break CTOR for summary blocks | minimal |

| Increased orphan rate | Y | Y | N | N |

| Reduces selfish mining and block witholding | N | N | Y | Y |

I could say the same: pretending there’s no confirmation time problem is harmful for BCH.

I can accept this criticism, we’re not yet in the stage where we could hype anything as a solution.

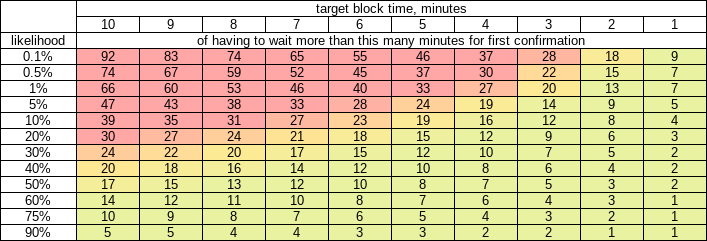

These two tables should be sufficient reason, unless you will hand-wave the need to ever wait for any confirmations.

Table - likelihood of first confirmation wait time exceeding N minutes

Table - likelihood of 1 hour target wait time exceeding N minutes

This is a great summary – thank you.

Might be helpful to tag this consolidated reply back to the TailStorm chain too.

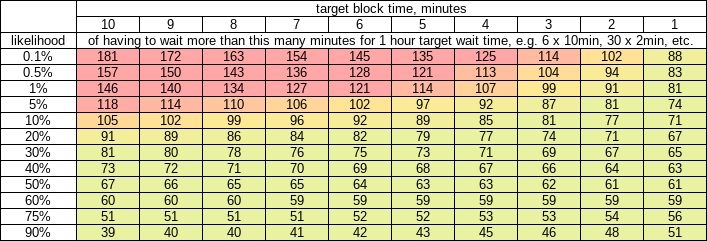

I made a schematic to better illustrate this idea, it would be just like speeding up blocks, but in a way that doesn’t break legacy SPV:

It would preserve legacy links (header pointers) & merkle tree coverage of all TXs in the epoch.

This doesn’t break SPV at all, it’d be just as if price did 1/K and some hash left the chain.

Legacy SPV clients would continue to work fine (at reduced security) with just the legacy headers.

However they could be upgraded to fetch and verify the aux PoW proofs just for most recent blocks, to prove that the whole chain is being actively mined with full hash.

So, the increased overheads drawback of just accelerating the chain would be mitigated by this approach, too.

ok, now your only advantage left is to have more consistent block-times, what about not having all this tailstorm and all this complexity but just allow a more advanced p2peer way of mining.

Specifically I’m thinking that a p2peer setup may be extended to be cumulative.

P2Peer is today a mining standard that fits in the current consensus rules and allows miners with a partial proof-of-work to update the to-be-mined block with a new distribution of the rewards and such.

Where it differs with your suggested approach is that, simply said, you lower the number to throw on a 20-sided dice to be 16 instead of 20, but you requires a lot more of them to compensate.

This is the simple and conceptual difference that you claim is the reason that tailstorm has more consistent blocktimes.

My point is, if that is your argument you should dress it down and make those 20 (or whatever number) of block-headers be shipped in the block and make the consensus update to allow that.

Minimum change, direct effect.

I dislike the soft-fork lying changes that say it is less of a change because it hides 90% of the changes from validating peers. That is a lie, plain and simple. It is why we reject segwit, it is why we prefer clean hard forks. As such, a simple and minimum version of what you propose (a list of proof of work instead of a single proof of work) is probably going to be much easier to get approved.

A simple list of 4 to 8 bytes per POW-item, one for each block-id that reached the partial required PoW, can be added in the beginning of the block (before the transactions) to have this information.

The block-header would stay identical, the difficulty, the merkle etc are all shared between POW items and the items themselves just cover the nonce and maybe the timestamp-offset. (offset, so a variable-size for that one)

The main downside here is that a pool changing the merkle-root loses any gained partial PoW, as such while confirmations may be much more consistent, the chance of getting into the next block drops and most transactions should expect to be in block+2.

Reasonably simple changes:

- block format changes slightly. Some bytes added after the header.

- A simple header can no longer be checked to have correct PoW without downloading the extra maybe 200 bytes. Which should be included in the ‘headers’ p2p calls.

- Miner software should reflect this, though there is no need to actually follow this, they just need one extra byte for the number of extra nonce-s.

- blockid should be calculated over the header PLUS the extra nonces. And next block thus points back to the previous one PLUS the extra nonces. Which has the funny side-effect of the block-ids no longer starting with loads of zero’s

New block-header-extended:

- Current 80 bytes header: Block Header

Used to calculate the PoW details over, what we always called block-id is now called ‘proof-hash’. - A start-of-mining timestamp. (4 bytes unsigned int)

- Number of sub-work items. (var-int)

This implies the sub-item targets. If there are 10, then the target of PoW is adjusted based on that. Someone do the math to make this sane, please. - Subwork: nonce (4 bytes)

- Subwork: time-offset against ‘start of mining’. (Var-int).

This entire dataset is to be hashed to become the block-id which is used in the next block to chain blocks.

To verify one takes the final blockheader and hashes that to get the work. Then for each sub-work item in the list replace in the final block-header the nonce and the time. The time is to be replaced by taking the ‘start-of-mining’ timestamp and adding to that the offset. After that hash the new 80 bytes header to get the work and add this to the total work done.

Now, I’m not suggesting this approach. It is by far the best way to do what tailstorm is trying to do without all the downsides, but I still don’t think it is worth the cost. But that is my opinion.

I’m just saying that if you limit your upgrade to JUST this part of tailstorm, it will have a hugely improved chance of getting accepted.

That’d be the only immediate advantage. However, nodes could extend their API with subchain confirmations, so users who’d opt-in to read it could get more granularity. It’d be like opt-in faster blocks from userspace PoV.

This looking like a SF is just a natural consequence of it being non-breaking to non-node software. It would still be a hard fork because:

- We’d HF the difficulty jump from K to 1/K. To do this as a SF would require intentionally slowing down mining for the transition so difficulty would adjust “by itself”, and it would be a very ugly thing to do. So, still a HF.

- Maybe we’d change the TXID list order for settlement block merkle root, not sure of the trade-offs here, definitely a point to grind out, the options I see:

- Keep full TXID list in CTOR order when merging subblock TXID lists. Slows down blocktemplate generation by the time it takes to insert the last subblock’s TXIDs.

- Keep them in subblock order and just merge the individual subblock lists (K x CTOR sorted lists), so you can reuse bigger parts of subblock trees when merging their lists.

- Just merge them into an unbalanced tree (compute new merkle root over subblock merkle roots, rather than individual TXs).

Just to make something clear, the above subchain idea is NOT Tailstorm. What really makes Tailstorm Tailstorm is allowing every Kth (sub)block to reference multiple parents + the consensus rules for the incentive & conflict-resolution scheme.

With the above subchain idea, it’s the same “longest chain wins, losers lose everything” race as now, it is still fully serial mining, orphans simply get discarded, no merging, no multiple subchains or parallel blocks.

Nice thing is that the above subchain idea is forward-compatible, and it could be later extended to become Tailstorm.

Sorry but all that looks like it would break way more things and for less benefits, but I’m not sure I understand your ideas right, let’s confirm.

First, a note on pool mining, just so we’re on the same page: When pools distribute the work, a lot of work will be based off same block template (it will get updated as new TXs come in, but work distributed between updates will commit to same TXs). Miners send back lower target wins as proof they’re not slacking off and they’re really grinding to find the real win, but such work can’t be accumulated to win a block, because someone must hit the real, full difficulty, win. Eventually 1 miner will get lucky and win it, and his reward will be redistributed to others. He could try cheat by skipping the pool and announcing his win by himself, but he can’t hide such practice for long, because the lesser PoWs serve the purpose of proving his hashrate, and if he doesn’t win blocks as expected based on his proven hash the pool would notice that he suspiciously has a lower win rate than expected.

Now, if I understand right, you’re proposing to have PoW be accumulated from these lesser target wins - but for that to work they’d all have to be based off same block template, else how would you later determine exactly which set of TXs the so accumulated PoW is confirming?

I think it would work to reduce variance only if all miners joined the same pool so they all work on the same block template so the work never resets, because each reset increases variance. Adding 1 TX resets the progress of lesser PoWs. Like, if you want less variance you’d have to spend maybe first 30 seconds to collect TXs, then lock it and mine for 10min while ignoring any new TXs.

Also, you’d lose the advantage of having subblock confirmations.

And the cost of implementing it would be a breaking change: from legacy SPV PoV, the difficulty target would have to be 1 because of:

SPV clients would have to be upgraded in order to see the extra stuff (sub nonces) and verify PoW, and that would add to their overheads, although the same trick I proposed above could be used to lighten those overheads: you just keep the last 100 blocks worth of this extra data, and keep the rest of header chain light.

yes, very good to avoid, in other words.

If you disagree then the onus of proof lies on you.

You understand right, and the tech spec I added in a later edit last night makes this clear. There is exactly one merkle-root.

You are wrong to say that in order to reduce variance ALL miners must join the same pool for the same reason the opposite of all miners being solo miners is not that there is exactly 1 pool.

Every pool added will already have the effect of reducing variance.

You can suggest that making it mandatory for all miners to join 1 pool is better, but then I’d have to retort with the good old saying that socialism is soo good, it has to be made mandatory.

In other words, don’t force 1 pool, but allow pools to benefit the chain AND the miner.

Actually, this is incorrect, SPV mining doesn’t derive the difficulty (and thus work) from the block-id. There is a specific field in the header for it. I linked the specification in my previous message if you want to check the details.

The details on how it does work is also in the original post. Apologies for editing it, which means you may not have seen the full message in the initial email notification.

Again, not promoting this personally. Just saying that this has the same effective gains as your much more involved system suggestions, without most of the downsides.

I still don’t think this is a good idea, even though the avoidance of subblocks and avoidance of changing difficulty and all the other things are useful, the balance is still not giving us enough benefit.

This led me to wrong conclusion on user experience, should’ve analyzed this better. It’s still Poisson for user wait times.

Yes, the length of the interval you land in will be based on Erlang distribution (20min average) but your average wait time until next block will still be 10min because when you make a TX you can land anywhere in the interval.

So: user wait time can be predicted with Poisson distribution, but the total duration of the block will be given by Erlang - which leads to impression that blocks are “always slow”, e.g. you pull up explorer it’s been 7 minutes since last block - and you will wait 10 more minutes: 17min total for the block but you only had to wait 10 minutes of that 17.

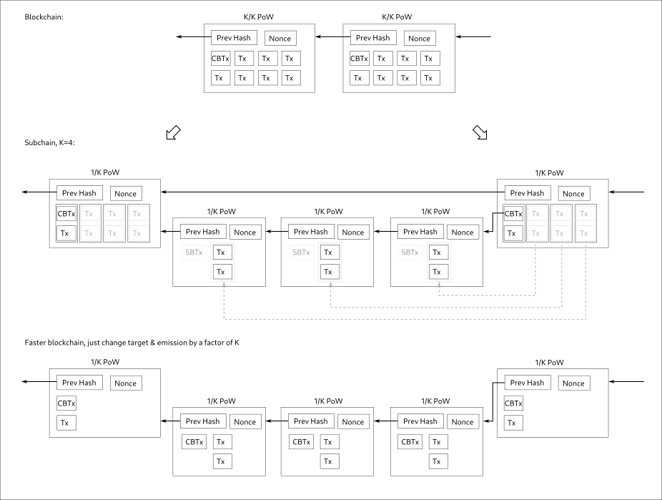

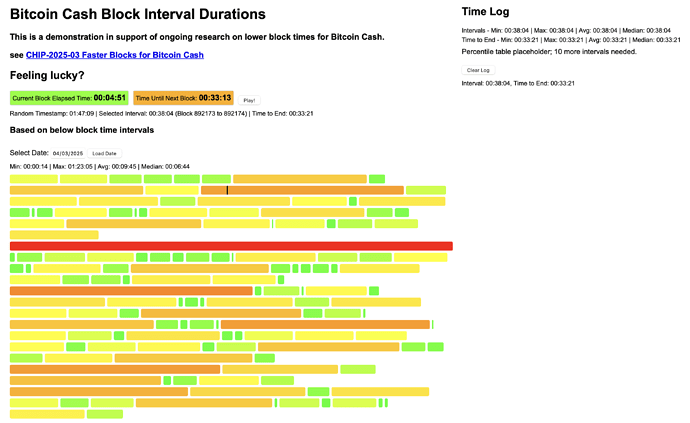

I made a little game to get the intuition for how random all of this is:

And yet… you click 11 times or more and you’ll see the percentiles table converge

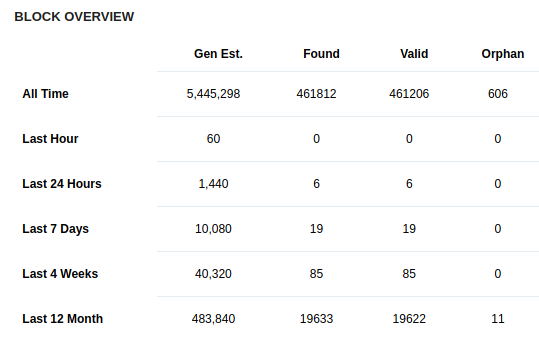

OK so initial search via both search engines and LLMs gave no results.

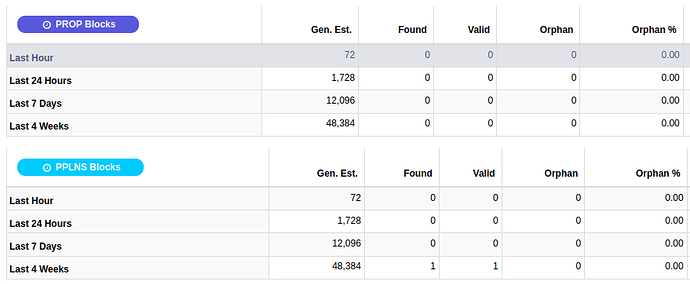

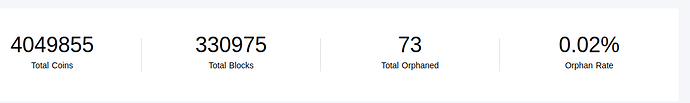

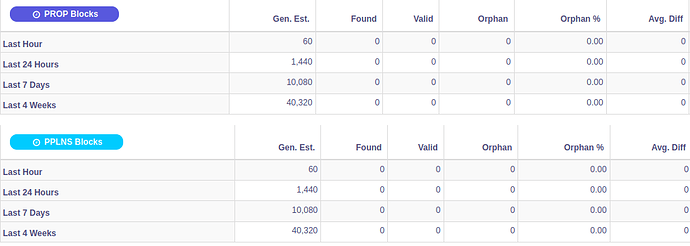

So I went to the source: Biggest mining pools of Feathercoin (1 min blocktime) and Dogecoin (1 min blocktime).

Feathercoin biggest pool:

Feathercoin smallest pool:

Dogecoin biggest pool:

Dogecoin small pool:

Additionally, there does not appear to be a difference between orphan rates for smaller and bigger pools.

ViaBTC has a nice stats page:

| Coin | ViaBTC Hashrate Share | ViaBTC Orphan Rate | Target Block Time, s |

|---|---|---|---|

| BTC | 11.4% | 0.03% | 600 |

| BCH | 20.8% | 0.02% | 600 |

| XEC | 39.1% | 0.06% | 600 |

| LTC | 28.5% | 0.02% | 150 |

| ETC | 1.6% | 0.23% | 15 1 |

| ZEC | 62.8% | 0.03% | 75 |

| ZEN | 52.1% | 0.1% | 150 |

| DASH | 29.2% | 0.05% | 150 |

| CKB | 10.9% | 3.39% | 10 2 |

| HNS | 62.4% | 0.01% | 600 |

| KAS | 12.9% | 0.63% | 0.1 3 |

| ALPH | 16.7% | 0.28% | 16 1 |

1 Orphans reduced with uncle block merging

2 Propose-commit transaction mining scheme propagates blocks with 0.5 * RTT, and adaptive block target time (bounded by 8 and 48 seconds) aims to maintain 2.5% average orphan rate (“NC-MAX”)

3 Orphans reduced due to BlockDAG structure (“GHOSTDAG”)

It is worth noting that once we do tests with 32MB (== 3.2MB with 1min blocktime) blocks with a testnet that spans over multiple continents, the orphan rate might fluctuate more.

Verifying the real-life functionality experimentally is the only way to achieve any sufficient level of certainty regarding this topic.

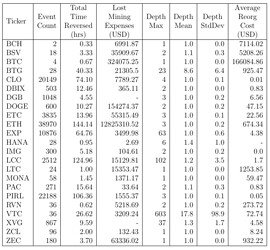

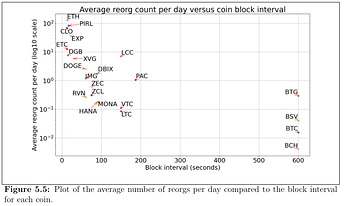

Found some research (JPT Lovejoy, An empirical analysis of chain reorganizations and double-spend attacks on proof-of-work cryptocurrencies (2020)) that monitored subject networks for ~9 months (June 2019 through March 2020) and recorded all orphan events.

We operated the reorg tracker for just over nine months on a single server located in Cam-

bridge, MA. The server ran coin daemons for each coin (except for ETH and ETC) and their

associated tracking processes, a database server and an API server for accessing data. Each

coin daemon or tracking process ran in its own docker container to provide isolation between

their system environments. The coin daemons were not permitted to listen for incoming con-

nections from their peer-to-peer networks and used the software’s default peer management

algorithm to make outgoing connections to other nodes. If permitted by the software, the

coin daemons were run in pruned mode with up to two gigabytes of archival block storage.

They don’t report orphan rate anywhere in the paper, but they report a table showing total orphan count (“Event Count”) for the observation period, and a diagram showing average daily count.

From that, I calculated orphan rates myself, simply by dividing the count by the expected number of blocks during the 9-month observation period.

| Ticker | Name | Target Block Time | Event Count | Orphan Rate |

|---|---|---|---|---|

| BCH | Bitcoin Cash | 600 | 2 | 0.01% |

| BSV | Bitcoin SV | 600 | 18 | 0.05% |

| BTC | Bitcoin | 600 | 4 | 0.01% |

| BTG | Bitcoin Gold | 600 | 28 | 0.07% |

| CLO | Callisto | 13 | 20149 | 1.12% |

| DBIX | Dubaicoin | 89 | 503 | 0.19% |

| DGB | Digibyte | 15 | 1048 | 0.07% |

| DOGE | Dogecoin | 60 | 600 | 0.15% |

| ETC | Ethereum Classic | 13 | 3835 | 0.21% |

| ETH | Ethereum | 13 | 38970 | 2.17% |

| EXP | Expanse | 21 | 10876 | 0.98% |

| HANA | Hanacoin | 90 | 28 | 0.01% |

| IMG | Imagecoin | 60 | 300 | 0.08% |

| LCC | Litecoin Cash | 150 | 2512 | 1.62% |

| LTC | Litecoin | 150 | 24 | 0.02% |

| MONA | Monacoin | 90 | 58 | 0.02% |

| PAC | PAC Global | 187 | 271 | 0.22% |

| PIRL | Pirl | 17 | 22188 | 1.62% |

| RVN | Ravencoin | 60 | 36 | 0.01% |

| VTC | Vertcoin | 150 | 36 | 0.02% |

| XVG | Verge | 30 | 867 | 0.11% |

| ZCL | ZClassic | 75 | 96 | 0.03% |

| ZEC | ZCash | 74 | 180 | 0.06% |

Comparing those numbers with ViaBTC’s:

| Ticker | Name | Target Block Time | Event Count | Network Orphan Rate | ViaBTC Orphan Rate | ViaBTC Hashrate Share |

|---|---|---|---|---|---|---|

| BCH | Bitcoin Cash | 600 | 2 | 0.01% | 0.02% | 20.8% |

| BTC | Bitcoin | 600 | 4 | 0.01% | 0.03% | 11.4% |

| ETC | Ethereum Classic | 13 | 3835 | 0.21% | 0.23% | 1.6% |

| LTC | Litecoin | 150 | 24 | 0.02% | 0.02% | 28.5% |

| ZEC | ZCash | 74 | 180 | 0.06% | 0.03% | 62.8% |

We can see that where ViaBTC is dominant, it has a lower orphan rate than the network, and where it is not, it has an equal or greater rate. This is consistent with the probabilistic model (a pool’s orphan rate is approximately the probability of the rest of the network finding a block during that pool’s propagation delay, while the network’s orphan rate is the hashrate-weighted average of all pools’ orphan rates).

When looking at the data above, one has to remember: a more centralized network will have a lower orphan rate! And this is not because of some relative connectivity advantage. Even if all pools have the same connectivity, it will be skewed due to hashpower advantage – because the probability of finding a block during the propagation window depends on hashpower, and the more you have, the less others have, and the less chance they’ll find a competing block in the propagation window.

On one extreme, a network mined 100% by one pool will have an orphan rate of 0% no matter the target block time. On the other, a network mined by 1,000 pools, each having exactly 0.1% share and the same latency between each other: every pool’s orphan rate would match the network’s.

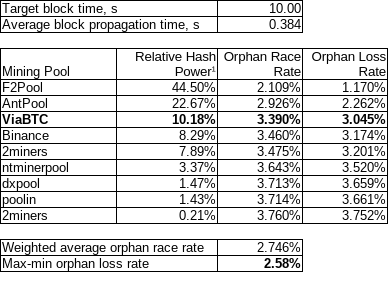

To illustrate, here’s what the model shows for Nervos (I did this calculation a while ago, taking a different snapshot):

F2Pool’s orphan loss rate (1.17%) is 2.6× lower than the smallest pool’s (3.75%). This means F2Pool keeps ~98.8% of its blocks while the smallest pool keeps only ~96.2%. Over time, this compounds into a centralizing force: larger pools are more profitable per hash, attracting more hashrate.

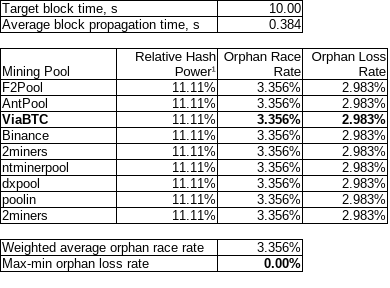

But, if everyone had the same hashrate:

Network orphan rate increases, but relative differences disappear.

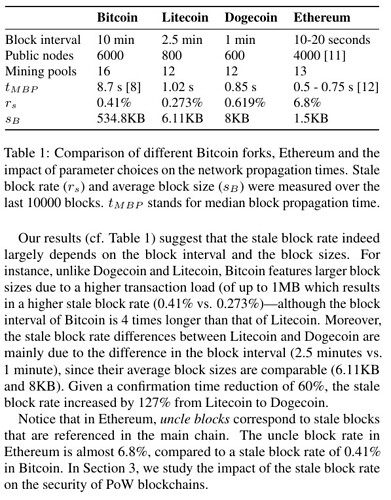

Found one more paper, older research:

Gervais et.al., ETH Zurich, “On the Security and Performance of Proof of Work

Blockchains” (2016)

Note that this was before compact block relay!

Look at those propagation times: 8.7s, 1.02s, 0.85s!

Now it’s all mostly under 0.2s

Ok so larger pools do have a default (centralising) advantage because smaller pools are less likely to find a block during the propagation window. That makes sense.

Is there any mitigation for this (I guess longer blocktimes, reducing the advantage relative to difficulty. Better pool connections maybe? Anything else?)? Better implementation and rollout of compact blocks? If we had a full list of mitigations, we could then present them along with the current industry rollout and expected cost/benefit of doing so.

And I guess also obviously it’s a question of what kind of centralising advantage we are prepared to accept relative to the utility of faster blocks for users.

Yup, but the CHIP is actually very conservative, we set 2% as the tolerable threshold for a minority pool’s orphan rate and then show that 1-min blocks can stay under even in worst case (0% mempool sync, full block download). Assuming BTC’s hashpower distribution the smallest pool would only have a revenue gap of 1% compared to the top pool - in that same worst case, and there the network’s rate would be ~1.67%. Judging by DOGE it’s likely that typically it would be 0.15% or lower while our blocks are empty, and maybe at 50% network capacity they’d go up to 0.5%.

Satoshi thought even 10% is tolerable.

If propagation is 1 minute, then 10 minutes was a good guess. Then nodes are only losing 10% of their work (1 minute/10 minutes). If the CPU time wasted by latency was a more significant share, there may be weaknesses I haven’t thought of. An attacker would not be affected by latency, since he’s chaining his own blocks, so he would have an advantage. The chain would temporarily fork more often due to latency.

If we used that same 10% criteria, with today’s propagation tech, the math would allow something like 6s blocks

Yeah, uncle block merging like Ethereum or Tailstorm do, or BlockDAG. For a fixed supply coin this would basically mean subsidizing orphan losses. And all that complexity for what? A measly 0.5%-1% gap? Other factors impact pool dominance more than that; else BTC’s distribution would be more uniform, but it is not.

Other than that: just keep improving block propagation. There’s still improvements we can do (removal of cs_main bottleneck, some better relay protocol than compact blocks), which would probably allow us to go below 20s while keeping orphan rates under 2%. Then there’s general Internet infrastructure, where Starlink could bring pings further down, one day allowing us to get down to 6s with 2% – without needing to deviate from classic blockchain structure and Nakamoto consensus.

The hard physical limit is the speed of light across the planet (~130ms round-trip), and we are nowhere near it.