There was a recent discussion about block times and I’d like to revive the topic. I was initially opposed to changing the block time because I thought it would be too costly and complicated to implement, but what if it isn’t? What if the costs are worth it? I was also skeptical about the benefits, but I have changed my mind on that as well. I lay out my reasoning below.

Obviously we’d proportionately adjust emission, DAA, and ABLA. My main concern was locktime and related Script opcodes, but those are solvable, too.

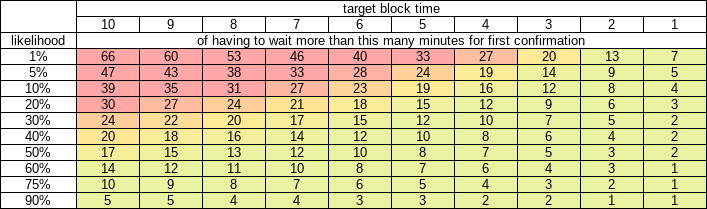

Note: in my original post I used the Poisson distribution to calculate probabilities, but that answers the wrong question: “How many blocks will be longer than X?”. I have edited this post with updated numbers based on the Erlang distribution, which answers the right question: “If I decide to make a TX now, what’s the likelihood of having to wait longer than X for N confirmations?”. More details here.

The 0-conf Adoption Problem

I love 0-conf; it works fantastically as long as you stay in the 0-conf zone. But as soon as you want to do something outside the zone, you’ll be hit with the wait. If you could do everything inside the 0-conf zone, that would be great, but unfortunately for us - you can’t.

How I see it, we can get widespread adoption of 0-conf in 2 ways:

1. Convince existing big players to adopt 0-conf. They’re all multi-coin (the likes of BitPay, Coinbase, Exodus, etc.) and, like it or not, BCH right now is too small for any of them to be convinced by our arguments for 0-conf. Maybe if we give it 18-more-months™ they will start accepting 0-conf? /s

2. Grow 0-conf applications & services. This is viable and we have in fact been growing it. However, growth on this path is constrained by the human resources working on such apps. There are only so many builders, and they still have to compete for users with other cryptos, services from 1., and with fiat incumbents.

We want to grow the total number of people using BCH, right?

Do our potential new users first have to go through 1. in order to even try 2.? How many potential users do we fail to convert if they enter through 1.? If a user’s first experience of BCH is through 1., then the UX suffers and maybe they will just give up and go elsewhere, without bothering to try any of our apps from 2.

Is that the reason that, since '17, LTC’s on-chain metrics grew more than BCH’s?

In any case, changing the block time doesn’t hamper 0-conf efforts, and if it positively impacts the user funnel from 1. to 2., then it would result in an increase in 0-conf adoption, too!

What about Avalanche, TailStorm, ZCEs, etc.?

Whatever finality improvements can be done on top of a 10-minute block time base, the same can be done on top of a 2-minute block time base.

Even if we shipped some improvement like that - we would still have to convince payment processors etc. to recognize it and reduce their confirmation requirements.

This is a problem similar to our 0-conf efforts. Would some new tech be more likely to gain recognition from the same players who couldn’t be convinced to support 0-conf?

The way I see it, changing the block time is the only way to improve UX across the board and all at once, without having to convince services 1 by 1 and having to depend on their good will.

Main Benefits of Reducing Block Time to 2 Minutes

1. Instant improvement in 1-conf experience

Think payment processors like BitPay, ATM operators, multi-coin wallets, etc. Some multi-coin wallets won’t even show an incoming TX until it has 1 conf! Imagine users waiting 20 minutes and thinking “Did something go wrong with my transfer?”.

BCH reducing the block time would result in an automatic and immediate improvement of UX for users whose first exposure to BCH is through these services.

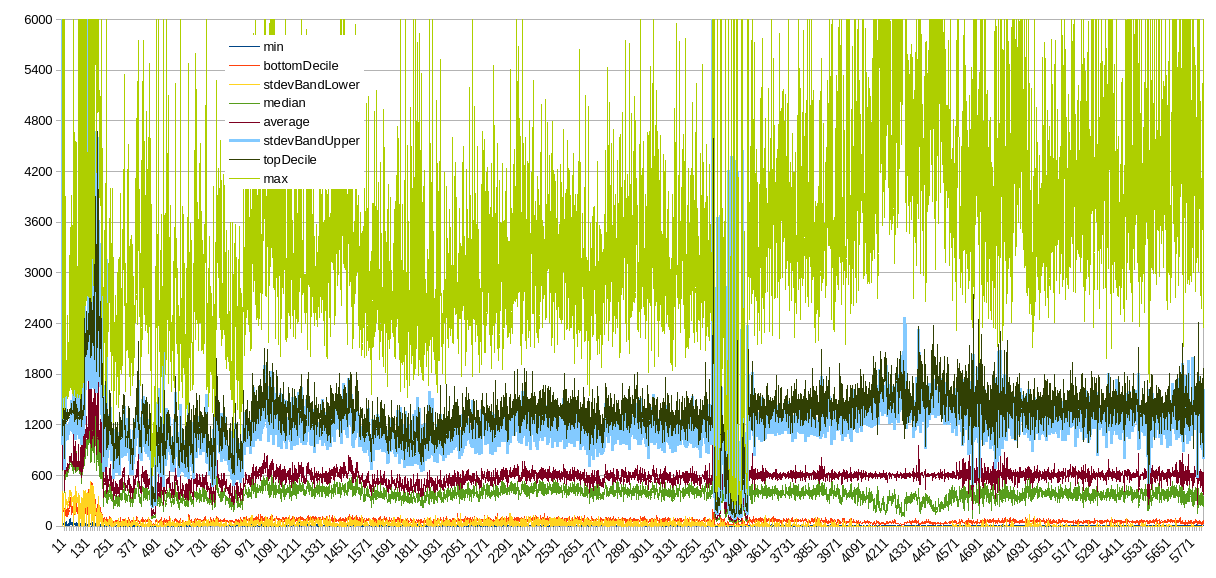

With a random process like PoW mining, there’s a 75% chance you’ll have to wait more than the target 10 minutes (Erlang distribution) in order to get that 1 confirmation, and a 40% chance of having to wait more than double the target time (20 minutes) for that 1 confirmation.

Table - likelihood of first confirmation wait time exceeding N minutes

Specific studies for crypto UX haven’t been done but maybe this one can give us an idea of where the tolerable / intolerable threshold is:

A 2014 American Express survey found that the maximum amount of time customers are willing to wait is 13 minutes.

So, there’s a 60% chance of experiencing intolerable wait time every time you use BCH outside the 0-conf zone!

What about a really slow experience, like 3 times the 10-minute target (30 minutes)? Chances of that are 20%, and after 1 week of daily use there’s a 79% chance you’ve had to wait for at least one “outlier” block of 30 minutes or more.

If you’re a newbie, you may decide to go and complain on some social media. Then you’ll be met by old-timers with their usual arguments: “Just use 0-conf!”, “It’s fixable if only X would do Y!”. What will that look like from the perspective of new users? Also, if we somehow grow the number of users, and some % of them complain, then the number of complainers will grow as well! Who will meet and greet all of them? It becomes an Eternal September problem.

Or, you’ll go on a general crypto forum and people will just tell you “Bruh, BCH is slow, just go use something else.” How many potential new users did we silently lose this way?

With 2-minute blocks, however, there’d be only a 1% chance of having to wait more than 13 minutes for 1 confirmation! In other words, 99% of blocks would fall into the tolerable zone, unlikely to trigger a user enough to go and complain or look for alternatives.
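For anyone who wants to check the 1-conf figures, here is a minimal sketch of the Erlang tail probability. It rests on an assumption: the percentages quoted in this section are matched by an Erlang distribution with shape = confirmations + 1 and mean interval equal to the target block time. The shape value is inferred from the numbers, not stated in the post, and the function and constant names are made up for illustration.

```python
import math

def erlang_sf(t, shape, mean_interval):
    """P(T > t) for an Erlang(shape, rate = 1 / mean_interval) wait."""
    x = t / mean_interval
    return math.exp(-x) * sum(x**n / math.factorial(n) for n in range(shape))

# Assumption: shape = confirmations + 1 reproduces the 1-conf figures above.
SHAPE_1CONF = 2

for target in (10, 2):
    for minutes in (10, 13, 20, 30):
        p = erlang_sf(minutes, SHAPE_1CONF, target)
        print(f"{target}-min target, wait > {minutes} min: {p:.1%}")

# Chance of at least one 30+ minute wait over 7 independent daily uses
# with a 10-minute target.
p30 = erlang_sf(30, SHAPE_1CONF, 10)
print(f"at least one 30+ min wait in a week: {1 - (1 - p30) ** 7:.0%}")
```

Passing a different `shape` lets you try other modelling choices without touching the rest of the calculation.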

2. Instant improvement in multi-conf experience

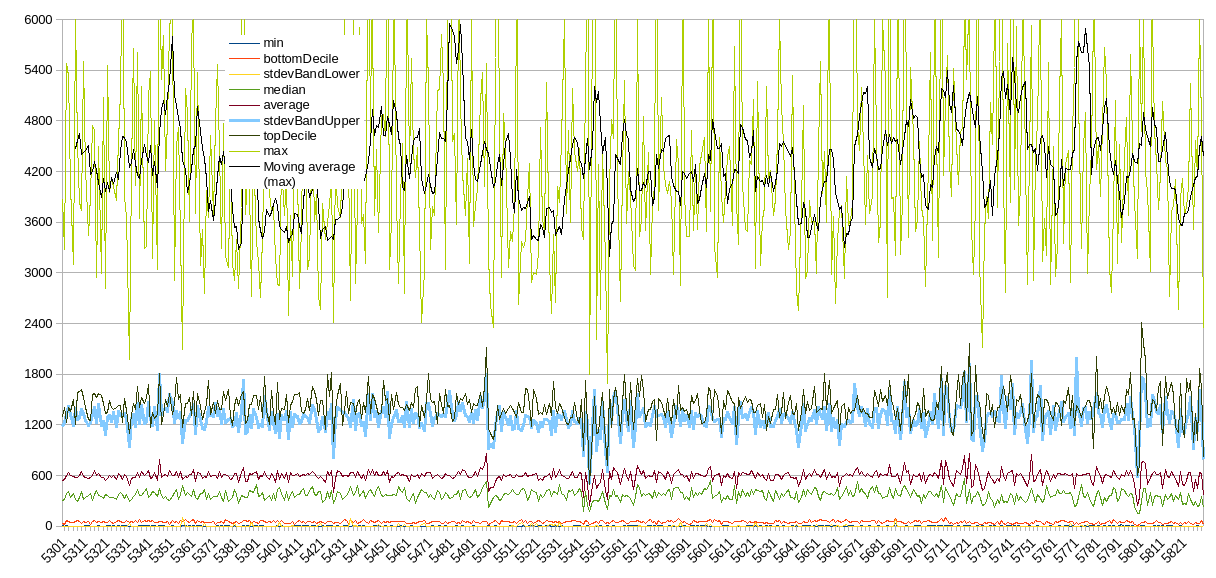

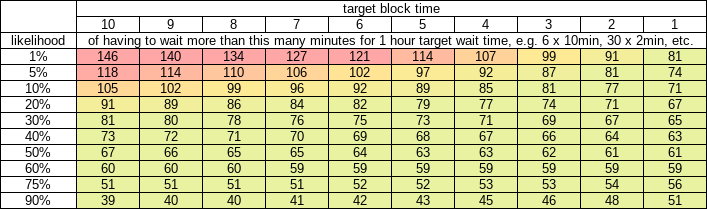

Assume that exchanges will keep a target wait time of 1 hour, i.e. require 6 x 10-min confirmations or 30 x 2-min confirmations. On average, nothing changes, right? The devil is in the details.

Users don’t care about aggregate averages, they care about their individual experience, and they will have expectations about their individual experience:

- The time until something happens (progress gets updated for +1) will be 1 hour / N.

- The number of confirmations will smoothly increase from 0 / N to N / N.

- I will have to wait 1 hour in total.

How does the individual UX differ from those expectations, depending on target block time?

- See 1-conf above: with a 10-min target, the perception of something being stuck will occur more often than not.

- Infrequent updating of the progress state negatively impacts the perception of a smoothly increasing progress indicator.

- Variance means that with 10-min blocks the 1-hour target will be exceeded by a lot more often than with 2-min blocks.

Table - likelihood of 1 hour target wait time exceeding N minutes

Note that even when waiting 80 minutes, the experience will differ depending on the target time: with a 10-min target, the total wait may exceed 80 min just due to 1 extremely slow block, or 2 blocks getting “stuck” for 20 minutes each. With a 2-min target, it will still update regularly: the slowdown will be experienced as a bunch of 3-5 min blocks, with the “progress bar” still updating.
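To put rough numbers on the variance argument, the sketch below compares how often a nominal 1-hour wait would run past a given number of minutes for 6 x 10-min versus 30 x 2-min confirmations. It assumes the total wait is Erlang-distributed with shape = confirmations + 1, chosen only for consistency with the 1-conf figures earlier in this post; that shape, and the helper name `p_exceed`, are assumptions made for illustration.

```python
import math

def p_exceed(minutes, confirmations, target_min):
    """P(total wait > minutes), assuming Erlang with shape = confirmations + 1."""
    shape = confirmations + 1  # assumption, see the note above
    x = minutes / target_min
    return math.exp(-x) * sum(x**n / math.factorial(n) for n in range(shape))

# Same nominal 1-hour wait, two different block time targets.
scenarios = {"6 x 10-min": (6, 10), "30 x 2-min": (30, 2)}

for minutes in (60, 70, 80, 90):
    row = ", ".join(
        f"{name}: {p_exceed(minutes, confs, target):.1%}"
        for name, (confs, target) in scenarios.items()
    )
    print(f"wait > {minutes} min -> {row}")
```

Under these assumptions the tail beyond 80 minutes is several times thinner with 2-minute blocks, even though the nominal wait is the same.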

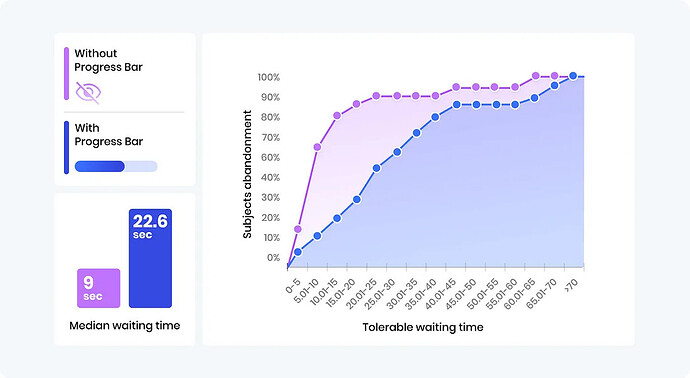

This “progress bar” effect has a noticeable impact on making even a longer wait more tolerable:

(source)

This study was for page loading times, where the expected waiting time is much lower, so the figures are in seconds and can’t be directly applied to our case. Still, we can at least observe how the progress bar effect increases the tolerable waiting time.

3. DeFi

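While our current DeFi apps are all working smoothly with 0-conf, there’s always a risk of 0-conf chains getting invalidated by some alternative TX or chain, either accidentally (concurrent use by many users) or intentionally (MEV). With shorter block times, such chains would get confirmed sooner, which shrinks the window in which they can be invalidated.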

But Would We Lose on Scalability / Decentralization?

During the discussion on Telegram, someone linked to a great past discussion on Reddit, where @jtoomim said:

The main concern I have about shortening the block time is that shorter block times reduce the capacity of the network, as they make the block propagation bottleneck worse. If you make blocks come 10x as fast, then you get a 10x higher orphan rate. To compensate and keep the network safe, we would need to reduce the block size limit, but decreasing block size by 10x would not be enough. To compensate for a 10x increase in block speed, we would need to reduce block size by about 20x. The reason for this is that block propagation time roughly follows the following equation:

block_prop_time = first_byte_latency + block_size/effective_bandwidth

If the block time becomes 10x lower, then block_prop_time needs to fall 10x as well to have the same orphan rate and safety level. But because of that constant first_byte_latency term, you need to reduce block_size by more than 10x to achieve that. If your first_byte_latency is about 1 second (i.e. if it takes 1 second for an empty block to be returned via stratum from mining hardware, assembled into a block by the pool, propagated to all other pools, packaged into a stratum job, and distributed back to the miners), and if the maximum tolerable orphan rate is 3%, then a 60 second block time will result in a 53% loss of safe capacity versus 600 seconds, and a 150 second block time will result in an 18% loss of capacity.

(source)

So yes, we’d lose something in technological capacity, but our blocksize limit floor is currently at 32 MB, while the technological limit is about 200 MB, so we still have headroom to do this.

If we changed the block time to 2 minutes and the blocksize limit floor to 6.4 MB in proportion, we’d keep our current capacity the same, but our technological limit would go down, maybe to 150 MB.
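The capacity figures in the quote can be reproduced with a short calculation. This is a sketch under stated assumptions, not the method used in the quoted comment: it takes the quoted equation, the quoted 1-second first-byte latency and 3% maximum orphan rate, and additionally assumes the orphan rate is approximately block_prop_time / block_time (a linear approximation chosen because it matches the quoted 53% and 18%). The function names are hypothetical.

```python
def safe_capacity(block_time_s, first_byte_latency_s=1.0, max_orphan_rate=0.03):
    """Relative safe throughput (block bytes per second, per unit of bandwidth)."""
    # Assumption: orphan_rate ~= block_prop_time / block_time.
    max_prop_time = max_orphan_rate * block_time_s
    # From block_prop_time = first_byte_latency + block_size / effective_bandwidth:
    # the time left for actually transferring the block.
    transfer_time = max(max_prop_time - first_byte_latency_s, 0.0)
    # Throughput is block_size / block_time, and block_size scales with transfer_time.
    return transfer_time / block_time_s

def capacity_loss(block_time_s, baseline_s=600):
    """Fraction of safe capacity lost relative to the baseline block time."""
    return 1 - safe_capacity(block_time_s) / safe_capacity(baseline_s)

for block_time in (60, 120, 150):
    loss = capacity_loss(block_time)
    print(f"{block_time:>3} s blocks: {loss:.0%} loss of safe capacity vs 600 s")
```

With these inputs the 2-minute case comes out at roughly a 24% loss, which is in the same ballpark as going from about 200 MB to about 150 MB.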

However, network technology will keep improving at the same rate, so the limit would rise again from there, likely well before our adoption and the adaptive blocksize limit algorithm get anywhere close to it.

What About Costs of Implementing This?

In the same comment, J. Toomim gave a good summary:

If we change the block time once, that change is probably going to be permanent. Changing the block time requires quite a bit of other code to be modified, such as block rewards, halving schedules, and the difficulty adjustment algorithm. It also requires modifying all SPV wallet code, which most other hard forks do not. Block time changes are much harder than block size changes. And each time we change the block time, we have to leave the code in for both block times, because nodes have to validate historical blocks as well as new blocks. Because of this, I think it is best to not rush this, and to make sure that if we change the block time, we pick a block time that will work for BCH forever.

These costs would be one-off and mostly contained to node software, and some external software.

Ongoing costs would increase somewhat because the chain of 80-byte block headers would grow by 57.6 kB/day as opposed to 11.52 kB/day now.
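The header figures follow from simple arithmetic, sketched below. The only input besides the block time is the 80-byte size of a serialized block header; the function name is illustrative.

```python
HEADER_BYTES = 80  # size of one serialized block header

def header_growth_per_day(block_time_min):
    """Bytes of new block headers per day for a given target block time."""
    blocks_per_day = 24 * 60 / block_time_min
    return blocks_per_day * HEADER_BYTES

for minutes in (10, 2):
    per_day = header_growth_per_day(minutes)
    per_year_mb = per_day * 365 / 1_000_000
    print(f"{minutes}-min blocks: {per_day / 1000:.2f} kB/day, {per_year_mb:.1f} MB/year")
```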

The benefits would pay dividends in perpetuity: 1-conf would forever be within tolerable waiting time.

But Could We Still Call Ourselves Bitcoin?

Who’s to stop us? Did Bitcoin ever make this promise: “Bitcoin must be slow forever”? No, it didn’t.

But What Would BTC Maxis Say?

Complaining about BCH making an objective UX improvement that works well would just make them look like clowns. Imagine this conversation:

A: “Oh but you changed something and it works good!”

B: “Yes.”
